Itanium: The Death of Red Hat Linux Support

Announcement

The History

The processor market was basically split between two commodity CISC (Complex Instruction Set Computing) chip makers, Intel (x86) and Motorola (68K): high-end workstation & server vendors consolidated on Motorola (68K), while PC makers leveraged Intel (x86).

Motorola indicated that an end to their 68K line was coming, and x86 appeared to be running out of steam. A new concept called RISC (Reduced Instruction Set Computing) was appearing on the scene. A wholesale migration from Motorola was on, with many vendors creating their own very high performance chips based upon this architecture. Various RISC chips were born, created by vendors and adopted by manufacturers, each with their own operating system based upon various open standards.

- SUN/Fujitsu/Ross/(various others) SPARC

- IBM POWER

- HP PA-RISC

- DEC Alpha

- MIPS MIPS (adopted by SGI, Tandem, and various others)

- Motorola 88K (adopted by Data General, Northern Telecom, and various others)

- Motorola/IBM PowerPC (adopted by Apple, IBM, Motorola, and various others)

Most vendors shipped relatively small volumes of full-fledged processors, although computing prices allowed for continued investment in increasingly smaller chips to enhance performance. Many of these architectures were cooperative efforts, with cross licensing, to increase volume and create a viable vendor base. The move to 64 bit occurred among most of these high-end vendors. As the investment required to shrink the silicon chip dies continued to rise, a massive consolidation started to occur in order to save costs and remain profitable.

The desktop market continued to tick away with 32 bit computing at a lower cost, with 2 primary vendors: Intel and AMD.

A massive move followed to consolidate the 64 bit RISC processors of the minority market share holders onto a common, larger, Intel based 64 bit Itanium VLIW (Very Long Instruction Word) processor. This was a very risky move, since VLIW was a new architecture and its performance was unproven. The vendors' reasoning was that Intel had deep enough pockets to fund a new processor. Some of the vendors who consolidated their architectures into Itanium included:

- HP - PA-RISC

- DEC, purchased by Compaq, purchased by HP - Alpha

- DEC, purchased by Compaq, purchased by HP - VAX

- Tandem, purchased by Compaq, purchased by HP - MIPS

- SGI - MIPS

Many of the RISC processors did not go away; they just moved to embedded environments, where many of the more complex features of the chips could continue to be dropped, so development would be less costly.

|

| [Sun Microsystems UltraSPARC 2] |

|

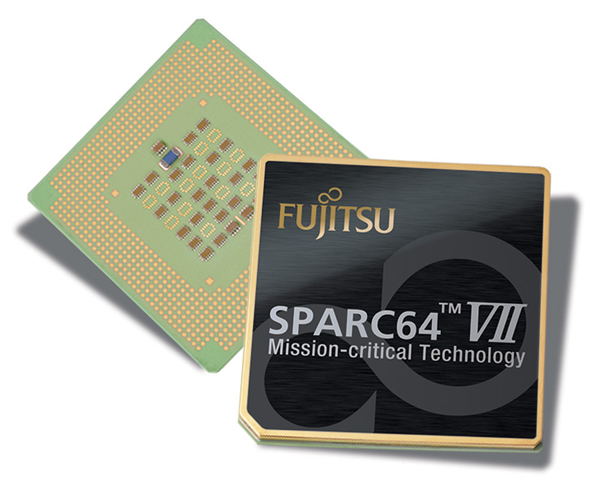

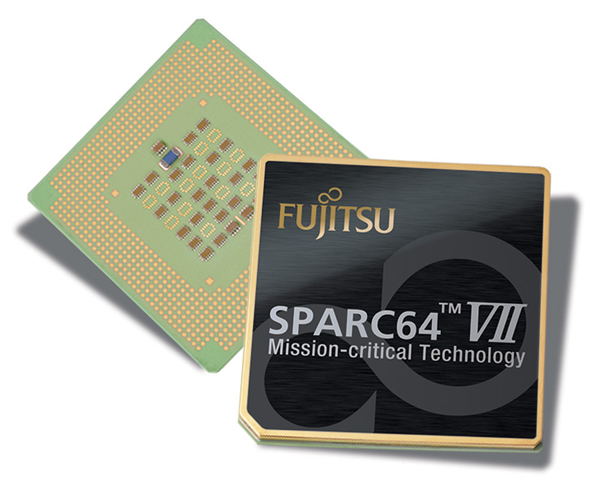

| [Fujitsu SPARC64 VII] |

|

| [IBM Power] |

The majority of RISC market share in the desktop & server arena seemed to consolidate during the first decade of the 2000s around two RISC architectures: SPARC, driven by the specifications of an open consortium (predominately SUN and Fujitsu), and POWER, a proprietary, single vendor driven architecture (predominately IBM).

[AMD Athlon FX 64 Bit]

AMD later released 64 bit extensions to the aging Intel x86 instruction set (which all vendors, including Intel, had basically written off as a dead-end architecture), creating what the market referred to as "x64". Intel was later forced into releasing a similar processor, competing internally with their Itanium. Much of the market's focus then shifted to consolidating servers onto these proprietary x64 based systems, sapping vitality and market share from RISC and VLIW vendors.

Network Management Implications


HP really drove the market to Itanium after acquiring many companies. A large number of operating systems needed to be supported internally, so the move to consolidate those operating systems and reduce costs became important.

HP OpenView is one of those key suites of Network Management tools which people don't get fired for purchasing. HP made announcements of support for Intel Itanium under their proprietary operating system HP-UX, Microsoft's proprietary Windows, and open source Linux. HP was never able to get traction for OpenView under Linux on Itanium or Windows on Itanium, although they were able to provide support for their own proprietary HP-UX platform, as well as for Linux under the x86 architecture.

With Open Source Red Hat Linux going away on Itanium, Itanium as a 64 bit architecture is clearly taking a severe downturn among the viable 3rd party architectures, and Network Management from OpenView will obviously never become a player in a market that will no longer exist.

The IBM POWER architecture, even though it is one of the last two substantial RISC vendors left, has never really been a substantial platform in the Network Management arena, even with IBM selling the Tivoli Network Management suite. Network Management will most likely never be a substantial power under POWER.

"Mom & Pop" shops run various Network Management systems under Windows, but the number of managed nodes is typically vastly inferior to the larger Enterprise and Managed Services markets. The software just does not scale as well.

Sun SPARC Solaris (with massive vertical and horizontal scalability) and Red Hat Linux x86 (typically limited to horizontal scalability) are really the only two substantial multi-vendor Network Management platform players left for large Managed Services installations. Red Hat abandoning HP's Itanium Linux only continues to solidify this position.
