SPARC: Road Map Updated!

The SPARC road map has been updated at a greatly accelerated pace over the past few years, with new SPARC releases arriving early, with higher performance, or both. It is quite exciting to see SPARC back in the processor game again!

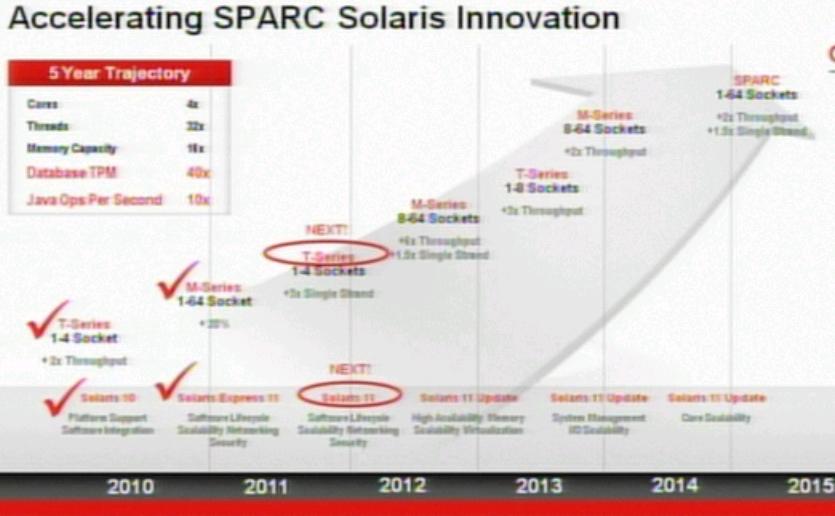

SPARC T3 Launch: SPARC Road Map

The following SPARC road map was revealed after the 16-core SPARC T3 launch in Q4 2010.

Solaris 11 Launch: SPARC Road Map

During the Solaris 11 launch in November 2011, the following SPARC road map was shown, reminding the market of the 8-core T4 processor delivery, with the same performance as the former 16-core processor and enhanced single-threaded performance.

It was also hinted that the SPARC T5 was ahead of schedule at Oracle - shipping in 2012.

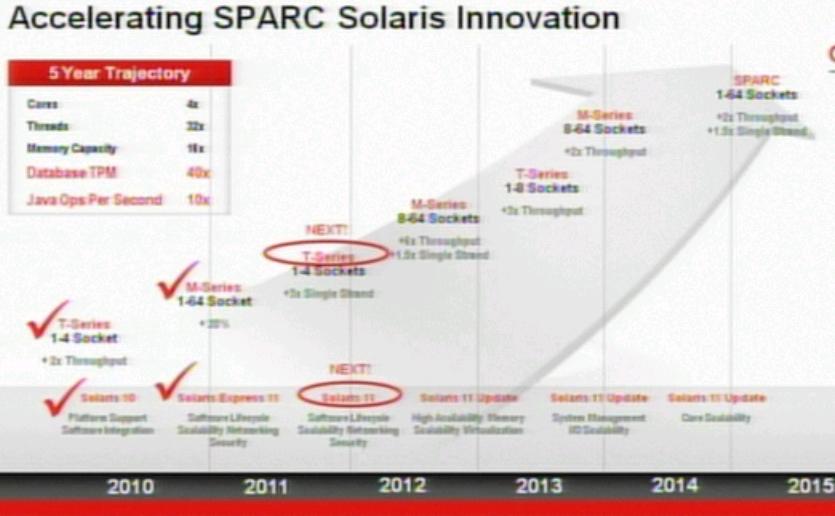

Now, it is February 2012, and the SPARC road map has officially been adjusted (although the exact date of the change is unknown, since there was no official announcement).

SPARC Road Map Analysis

Note the accelerated changes in the SPARC road map over the past few months:

- The 8-socket T5? processor will perform well enough to replace the 8-socket M-series platform in 2012 and be competitive up to the 16-socket M series.

- The next 8-socket T5+? processor will perform well enough to replace the 8-socket M-series platform in 2013 and be competitive up to the 16-socket M series.

Unified SPARC - T4 Release

It should be noted that Oracle released a unified T/M processor socket called the "SPARC T4" in Q4 2011. It performed as well as (or better than, depending on the metric) the "SPARC T3" (with 128 threads per socket), which was released in 2010. The T4 halved the core count, at least doubled the speed of a T3 thread (with 64 threads per socket), and added a new option where thread speed could be 6x faster (with 8 threads per socket).
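The per-socket figures quoted above can be checked with a little arithmetic. The following is only a rough sketch: it assumes throughput scales linearly with thread count and per-thread speed, treats "doubled (or better)" as exactly 2x, and uses a hypothetical helper name (`relative_throughput`). It is not a benchmark.

```python
# Per-socket figures quoted in the text; one T3 thread is the unit of speed (1.0).
T3_THREADS = 128
T4_THREADS = 64
T4_SPEEDUP = 2.0        # "doubled the speed (or better)", taken as a lower bound
T4_FAST_THREADS = 8
T4_FAST_SPEEDUP = 6.0   # the "6x faster" option with 8 threads per socket


def relative_throughput(threads: int, per_thread_speed: float) -> float:
    """Aggregate throughput in T3-thread equivalents (assumes linear scaling)."""
    return threads * per_thread_speed


t3 = relative_throughput(T3_THREADS, 1.0)
t4 = relative_throughput(T4_THREADS, T4_SPEEDUP)
t4_fast = relative_throughput(T4_FAST_THREADS, T4_FAST_SPEEDUP)

print(f"T3 socket:            {t3:.0f} T3-thread equivalents")
print(f"T4 socket (64 thr.):  {t4:.0f} T3-thread equivalents")
print(f"T4 socket (8 thr.):   {t4_fast:.0f} T3-thread equivalents")
```

Under these assumptions the 64-thread T4 socket matches the 128-thread T3 socket on aggregate throughput, while the 8-thread option trades aggregate throughput for much faster individual threads.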

Extrapolations and Remembrance

The M-Series was out of reach for many smaller service providers, while the lower-end T series offered the price-performance to compete only with mid-range systems, where platform throughput mattered. The recently released T4 offered more competitive single-threaded speed, to eat away at lower-end open-systems market share. The next-generation T-Series, expected later this year, will eat into the market share of more expensive higher-end open systems, with higher socket counts at lower cost.

Oracle already hinted that the T5 will have some of the features of the former RK or Rock processor (memory versioning looks like a relational memory interface). The addition of hardware compression, columnar database acceleration, Oracle number acceleration, and low-latency clustering (at the socket level) will make it a great accelerator for Oracle MySQL and an outstanding one for Oracle RDBMS - placing SPARC years ahead of POWER and proprietary x86. The competitive benefit to Network Management systems with large embedded databases (i.e. performance management) will be immense.

This is not the first time that adding accelerators gave SPARC a massive boost - the addition of crypto cores inside the T processors made it the fastest single-socket HTTPS server on the market for years and the highest-performing contender for scalable encrypted polling engines (for managed service provider class network management vendors). Non-competitive network management service providers avoided the encryption discussion around SSH and SNMPv3 because they could not "keep up", while competitive software providers outshone their competition on SPARC. With the recent release of Intel's crypto instructions, that benefit is waning for brand new network management service providers. The compression algorithms, in conjunction with the database accelerators, will have come "just in time".

Clearly, the investment in the S3 core provided Oracle with the breathing space it needed to unify the M and T series, starting with the lower-end SPARC T4 platforms. With the soon-to-be-released SPARC T5 platforms, Oracle will continue to consume the low-hanging fruit in the M-series space (in addition to the AIX and HP-UX space) with a high-performing SPARC core which scales to greater socket counts.

Final Thoughts

It appears pretty clear that "NetMgt.BlogSpot.COM" was the first to break the road map update news. Continue to manage your networks with obsession and security!