Making Known the Secrets to Network Management. Raising up a new generation of professionals.

Friday, December 28, 2012

Notable Events: NeXT and Perl

ARS Technica published:

The Legacy of NeXT Lives On in MacOSX

NeXTSTEP technologies still fuel Macs, iPhones, and iPads 16 years later.

The Register published:

The Perl Programming Language Marks 25th Anniversary

Munging data since 1987

Thursday, December 27, 2012

Storage News: December 2012 Update

Oracle: Discusses Tape Storage...

Nanotube Non-Volitile Storage: Nantero NRAM ...

Dell Storage...

Emulex: Storage Network Vendor Buys Network Management Endace...

Red Hat Unites OS to Gluster Clustered Storage...

Advise from The Register: Cisco, Don't Buy NetApp...

Wednesday, December 26, 2012

Networking: 2012 December Update

Cisco to sell off Linksys division; Barclays to Find Buyer

DARPA to Create 100Gb Wireless Skynet

Ethernet Switch Sales Decline, SDN (Software Defined Networks) to Explode...

IBM Integrates Optics onto Silicon...

Tuesday, December 25, 2012

New Technology: 2012 December Update

SSD prices are low—and they'll get lower. MeRAM posed to supplant NAND flash memory...

IBM Integrates Optics onto Silicon...

Sun/Oracle receives patent 8,316,366 on Transactional Threading on November 2012... this came on the heels of a 2011 paper on formally verifying transactional memory on September 2011.

Silence has been from Sun/Oracle VLSI group on Proximity Communications, research funding is due to expire in 2013, is there a product in the future?

Samsung Spends $3.9bn on iPhone Chip Factory in Texas.

Texas Instruments to cut 517 OPAM Smartphone/Tablet Chip Manufacturing jobs in France.

AWS (Amazon Web Services) Hosting Server Retirement Notifications Wanting...

Microsoft Outlook 2013 Willfully Broken: Will Not Recognize .doc or .xls Files

Microsoft Windows 8: Hidden Backup & Clone Feature

Monday, December 24, 2012

Security: 2012 December Update

Microsoft Windows Security Update Breaks Fonts... Update 2753842 Root Cause...

Breaking Windows Passwords in under 6 hours...

New "Dexter" Malware Infects Microsoft Point of Sale Systems to Steal Credit Cards...

Distributed Denial of Service Attacker Anonymous on the Run...

The Pakistan Cyber Army Attacks Chinese and Bangladeshi Web Sites...

ITU: Deep Packet Snooping Standard Leak...

Democrats and Republicans Unite Against ITU Internet Control...

Industrial HVAC systems targeted by hackers...

Microsoft Internet Explorer watching you, even when not open on your screen!

Android Malware Trojan Taints US Mobiles, Spews 500,000 Texts A Day!

Baby got .BAT: Old-school malware terrifies Iran with del *.*; dubbed BatchWiper; found 7 months after Flame discovery

Apple Shifts iTunes to HTTPS, Sidesteps China’s Firewall

Christopher Chaney, Scarlett Johansson's e-mail hacker, sentenced to 10 years

Sunday, December 9, 2012

Made in the U.S.A.

|  |

| Tim Cook 1979 | Apple CEO Tim Cook 2012 |

Made in the U.S.A.

Abstract:

In a recent interview with the Apple CEO Tim Cook, thanks to The Register for the pictures, there was a short discussion on asembling computer systems in the United States. There have also been short articles on which systems are being manufactured closer to the nation of purchase. Debunking of false 1st world manufacturing drivers as well as industry stated drivers for 1st world manufacturing will be reviewed. The United States is not the only nation which will benefit from distributing manufacturing capabilities outside of large 3rd world manufacturing facilities, so they are also worth the discussion.

|

| North America, courtesy: The Lonely Planet |

When the United States was a mere "colony", most of the manufacturing was done in England. Much of this started to change during the Industrialization of America. The most significant sweeps in manufacturing, perhaps occurred during World War 1 and World War 2, when so much of the manufacturing capacities around the world were destroyed.

Honestly, I don't remember anything being manufactured in the United States in recent time, except building materials. Of course, wood based product are increasingly being produced in South America, and dry wall is being increasingly produced in China. A recent trip to Home Depo had made me realize this - and helped me to understand that the next building-boom will not necessarily drive U.S. industry.

|

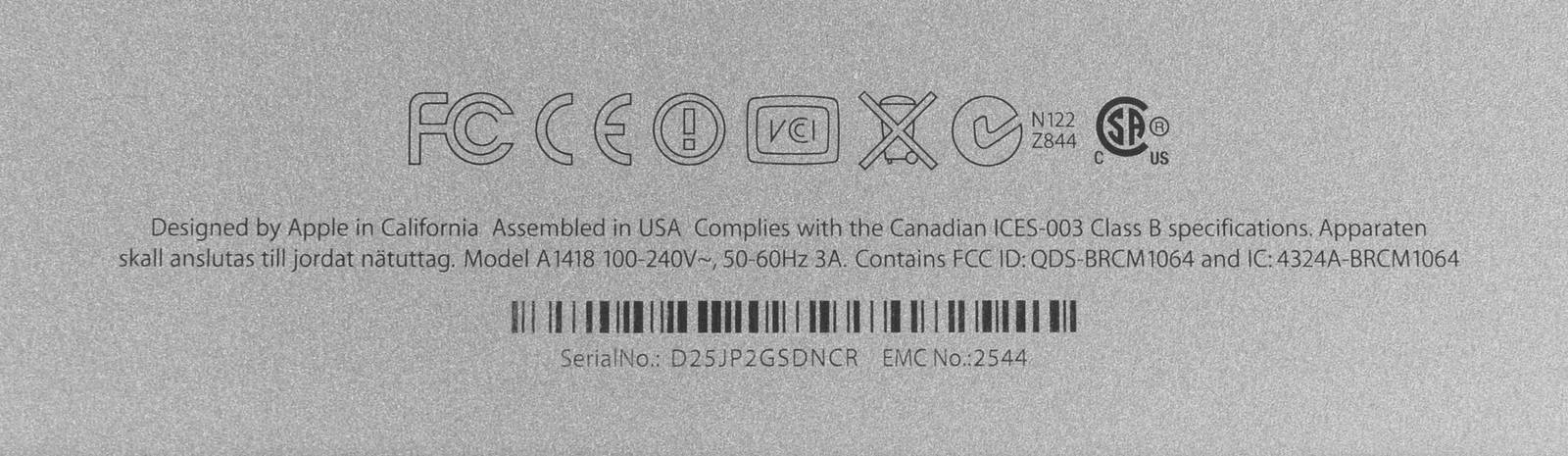

| Apple 2012 21" iMac, courtesy The Register |

During the "tead down" of a 2012 21" iMac, it was noted that the consumer product was assembled in the United States. People have mentions that 2011 Apple iMac's have been assembled in the U.S.A., as well as Mac Pro in 2007, a Mac Mini in 2006, as well as a decade-old Apple Macintosh G3. Computer World mentioned the 1999 Power Mac, the first iMac in 1988, and even the two decade old Apple II.

One of the most interesting quotes from Apple CEO was in regard to manufacting of Apple consumer electronics:

"It's not as much about price," he said, "it's about the skills, etcetera. Over time there are skills that are associated with manufacturing that have left the US."Remembering former CEO of Apple, Steve Jobs, when he was "fired" from Apple, he started a new computer company called "Next", in the 1980's. During that time, they assembled computer systems in an office - but they could never keep up with the demand. Next turned into a "software company" and was later re-purchased by Apple. Computer World may have presented the argument for consumer electronics best:

And in Apple's market, according to Cook, those skills weren't here to begin with. "The consumer electronics world was really never here," he said, "and so it's not a matter of bringing it back, it's a matter of starting it here."

Cook's announcement was both a recognition of real-world manufacturing and a bit of a departure from former CEO Steve Jobs' opinion. In a meeting with President Obama in 2011 just months before his death, Jobs reportedly said, "Those jobs aren't coming back," when the President asked what it would take to make iPhones in the U.S.How will Apple bring more manufacturing to the United States? Well, apparently Apple does not have the know-how to do it themselves, at an economically feasible cost.

Cook said that Apple will invest more than $100 million in the project -- pocket change for a company that has over $120 billion in the bank -- but that it wouldn't do it themselves. "We'll be working with people, and we'll be investing our money," he said.In essence, Tim Cook was right - manufacturing in the United States was, and still is, a difficult endeavour. Regulations are a huge issue, perhaps even a liability issue, that Apple does not feel comfortable dealing with on a larger scale.

|

| Apple 2011 iMac, courtesy arstechnica |

More than Apple?

It seems more computer manufacturering is happening outside of China. A recent article from the New York Times mentions:

Five years ago, he says, H.P. supplied most of Europe’s desktops from China, but today it manufactures in the Czech Republic, Turkey and Russia instead. H.P. sells those kinds of computers particularly to business customers.While not the cost structure of "first world" manufacturing, it is still interesting.

|

| Dumb Reporter for New York Times, courtesy calvinayre |

What exactly drives manufacturing, some may ask?

The notorious New York Times suggests:

Today, rising energy prices and a global market for computers are changing the way companies make their machines.Immediately after making this statement, they went on to use computer consumption in Europe as s supporting argument, which immediately destroys half of the original statement, since Europe is a First World nation and had always consumed computers, thus this "global market" had not changed, from a consumption perspective. This only leaves Energy.

Also in the same article, The Times mentions:

In 1998, President Bill Clinton visited a Gateway Computer factory outside Dublin to cheer the role of American manufacturers in the rise of a “Celtic Tiger” in technology. That plant was shut in 2001, when Gateway elected to save costs by manufacturing in China.Energy was at its lowest point during the Clinton years, and started to rise during the G.W. Bush years, so cheap to rising cost of energy was part of the equation to outsource to China. Energy costs are higher in the 1st world than in the 3rd world, where reduced regulation means more plentiful energy at a lower cost.

If The New York Times can't present a cohesive argument, then what are the incentives?

The Main Drivers for 1st World Manufacturing:

The previous New York Times article mentioned some cohesive points on locality of components. Manufacturers who have major components produced in the 1st world may find advantageous assembly in the 1st world. For apple, the following proves interesting:

Intel, which makes most of the processors, has plants in Oregon, Arizona, New Mexico, Israel, Ireland and China. ... The special glass used for the touch screens of Apple’s iPhone and iPad, however, is an exception. It comes primarily from the United States.However, for cheaper [Android] phones, hand-held games, and laptops, the opposite seems to be the case, manufacturing may never come to the 1st world again:

Many other chip companies design their own products and have them made in giant factories, largely in Taiwan and China. Computer screens are made in Taiwan and South Korea, for the most part.

Time to Market is a huge benefit for one Chinese manufacturers. ComputerWorld also mentioned China's Lenovo manufacturing of laptops in North Carolina in October 2012:

The company, which is based in China, earlier this month announced it would open a factory to make computers in Whitsett, N.C. -- its first such facility in the U.S.

...

Manufacturing in the U.S. will help Lenovo get its products to customers more quickly, said Peter Hortensius, senior vice president of the product group at Lenovo...The company will manufacture ThinkPad laptops and tablets starting early next year, and with the new factory, Lenovo hopes computers could reach customers within a week, or in some cases, overnight. But initial supplies of products like the ThinkPad Tablet 2, which will become available in October, will not be made in the U.S. factory.

...

Many Lenovo computer shipments originate from China and are supposed to reach customers in 10 days, but in some cases take weeks. The company also has factories in Japan, Brazil, Germany and Mexico.

The same article in ComputerWorld also dicusses Lenovo's desire to appear to patriotism for market penetration:

The "Made in USA" tag on computers manufactured in North Carolina will resonate with some buyersIn some cases, it may be tax incentives, but The New York Times suggested that is not enough, by placing the failure in the same sentence as the reason.

A Dell factory in Winston-Salem, N.C., for which Dell received $280 million in incentives from the government, was shut in 2010 (Dell had to repay some of the incentives).

There are clearly drivers for 1st world manufacturing.

|

| President Obama, courtesy Carnagie Mellon School of Computer Science |

This is always the question. Perhaps, this is best mentioned at the end of the New York Times article...

As cheap as a Chinese assembly worker may be, an emerging trend in manufacturing, specialized robots, promises to be even cheaper. The most valuable part of the computer, a motherboard loaded with microprocessors and memory, is already largely made with robots.The ultimate answer is, there are not many good high-wage jobs in manufacturing any longer. This was painfully made clear during President Obama's campaign tour across the country - everywhere he went, he talked about manufacturing.

|

| U.S. Manufacturing Jobs during Obama Presidency, courtesy The American Thinker |

Computer manufacturing will not bring it back.

Tuesday, December 4, 2012

Solaris 10: Using Postgres (Part 2)

|

| (Postgres Logo) |

Solaris had long been the operating system for performing managed services in the telecommunication arena. During a time when Oracle priced Solaris out of the market by charging a higher fee for similarly performing hardware than other competitors, Sun Microsystems started bundling Postgres and later purchased MySQL for bundling. Postgres is a simple, easy to enable, royalty free database available for Solaris. This article will discuss using the Solaris 10 bundled Postgres database.

Setting Up Postgres

The first article in this series, Solaris 10: Using Postgres (Part 1), discusses how to enable a reasonable 64 bit Posrgres database bundled with Solaris 10, preparing the first user, as well as running the first command line access.

Creating a Table

References

[html] Creating a Table

[html] Populating a Database Notes

[html] SQL Copy Data Into or From Table

[html] SQL Insert Into Table Command

[html] DML Inserting Data Into a Table

[html] SQL Update Data in Table

[html] SQL Delete From Table Command

[html] SQL Truncate Data in Table

[html] Insert or Update PG-SQL Expand 38-1

[html] Database Maintenance Through Vacuum

Labels:

create table,

delete,

Insert,

PostGres,

PostgreSQL,

psql,

SQL,

update

Monday, December 3, 2012

Solaris 10: Using Postgres (Part 1)

|

| (Postgres Logo) |

Solaris had long been the operating system for performing managed services in the telecommunication arena. During a time when Oracle priced Solaris out of the market by charging a higher fee for similarly performing hardware than other competitors, Sun Microsystems started bundling Postgres and later purchased MySQL for bundling. Postgres is a simple, easy to enable, royalty free database available for Solaris. This article will discuss setting up the Solaris 10 bundled Postgres database.

|

| (Sun Microsystems Logo) |

History:

From the first pages of the PostgreSQL documentation:

The object-relational database management system now known as PostgreSQL is derived from the POSTGRES package written at the University of California at Berkeley. With over a decade of development behind it, PostgreSQL is now the most advanced open-source database available anywhere. The POSTGRES project, led by Professor Michael Stonebraker, was sponsored by the Defense Advanced Research Projects Agency (DARPA), the Army Research Office (ARO), the National Science Foundation (NSF), and ESL, Inc. The implementation of POSTGRES began in 1986.Postgres has existed a long time, from the same roots as Berkeley UNIX, the original base operating system for Sun Microsystem's Solaris.

|

| (Sun Solaris Logo) |

Versions:

Under Solaris 10, Sun Microsystems bundled Postgres. Basic directory structures are as follows:

V240/root$ ls -la /*r/postgresUnder Solaris 10, Postgres 8.2 and 8.3 are shipped. With 8.3, both 32 and 64 bit versions.

/usr/postgres:

total 12

drwxr-xr-x 6 root bin 512 Jan 2 2010 .

drwxr-xr-x 44 root sys 1024 Mar 6 2010 ..

drwxr-xr-x 10 root bin 512 Jan 2 2010 8.2

drwxr-xr-x 9 root bin 512 Jan 2 2010 8.3

drwxr-xr-x 2 root bin 512 Jan 2 2010 jdbc

drwxr-xr-x 6 root bin 512 Jan 2 2010 upgrade

/var/postgres:

total 8

drwxr-xr-x 4 postgres postgres 512 Jan 2 2010 .

drwxr-xr-x 51 root sys 1024 Nov 10 2010 ..

drwxr-xr-x 4 postgres postgres 512 Jan 2 2010 8.2

drwxr-xr-x 5 postgres postgres 512 Jan 2 2010 8.3

(It should be noted that with Postgres 8.3, community support is projected to end in 2012.)

File System Locations:

Before using Postgres, it may be advisable to mount additional disks in a ZFS pool and mount them. This is not strictly the "correct" way to set up a set of database directories, but for a small system where root disks are mirrored and a second set of mirrored disks are used for applications, it will be adequate.

V240/root$ zfs create zpool1/pg_8_3_backups

V240/root$ zfs create zpool1/pg_8_3_data

V240/root$ zfs create zpool1/pg_8_3_data_64

V240/root$ zfs set mountpoint=/var/postgres/8.3/backups zpool1/pg_8_3_backups

V240/root$ zfs set mountpoint=/var/postgres/8.3/data zpool1/pg_8_3_data

V240/root$ zfs set mountpoint=/var/postgres/8.3/data_64 zpool1/pg_8_3_data_64

V240/root$ zfs list

NAME USED AVAIL REFER MOUNTPOINT

zpool1 1.92G 65.0G 1.92G /u001

zpool1/pg_8_3_backups 21K 65.0G 21K /var/postgres/8.3/backups

zpool1/pg_8_3_data 21K 65.0G 21K /var/postgres/8.3/data

zpool1/pg_8_3_data_64 21K 65.0G 21K /var/postgres/8.3/data_64

V240/root$ cd /var/postgres/8.3

V240/root$ chown -R postgres:postgres *

The final 2 steps are critical, if ZFS file systems will be mounted and used, the default ownership is "root" and the starting process will fail if those ZFS directories are not owned by the dba "postgres".

When starting 8.3 version of Postgres, the data should now be stored on zpool1 application pool.

Services:

Postgres is a first-class citizen under Solaris 10. There are no start/sop scripts needed to be written - they are pre-bundled as a variety of services within Solaris Service Management Facility (SMF).

V240/root$ svcs "*postgres*"The database version of choice can be enabled through SMF.

STATE STIME FMRI

disabled 12:49:12 svc:/application/database/postgresql:version_82

disabled 12:49:12 svc:/application/database/postgresql:version_82_64bit

disabled 12:49:12 svc:/application/database/postgresql_83:default_32bit

disabled 12:49:12 svc:/application/database/postgresql:version_81

disabled 12:49:13 svc:/application/database/postgresql_83:default_64bit

Review Database Owner:

Solaris comes with role based access to Postgres pre-installed. They should look similar to the following:

V240/root$ grep postgres /etc/passwd /etc/user_attr /etc/security/exec_attrWith the permissions and dba account set up correctly, it should be ready to start.

/etc/passwd:postgres:x:90:90:PostgreSQL Reserved UID:/:/usr/bin/pfksh

/etc/user_attr:postgres::::type=role;profiles=Postgres Administration,All

/etc/security/exec_attr:Postgres Administration:solaris:cmd:::/usr/postgres/8.2/bin/initdb:uid=postgres

/etc/security/exec_attr:Postgres Administration:solaris:cmd:::/usr/postgres/8.2/bin/ipcclean:uid=postgres

/etc/security/exec_attr:Postgres Administration:solaris:cmd:::/usr/postgres/8.2/bin/pg_controldata:uid=postgres

/etc/security/exec_attr:Postgres Administration:solaris:cmd:::/usr/postgres/8.2/bin/pg_ctl:uid=postgres

/etc/security/exec_attr:Postgres Administration:solaris:cmd:::/usr/postgres/8.2/bin/pg_resetxlog:uid=postgres

/etc/security/exec_attr:Postgres Administration:solaris:cmd:::/usr/postgres/8.2/bin/postgres:uid=postgres

/etc/security/exec_attr:Postgres Administration:solaris:cmd:::/usr/postgres/8.2/bin/postmaster:uid=postgres

Starting Postgres:

The Postgres database can be started from the dba user.

V240/user$ su - root

Password:

V240/root$ su - postgres

V240/postgres$ svcadm enable svc:/application/database/postgresql_83:default_64bit

V240/postgres$ svcs "*postgres_83:default_64bit"

STATE STIME FMRI

offline* 0:43:27 svc:/application/database/postgresql_83:default_64bitsvcs: Pattern

V240/postgres$ svcs "*postgresql_83:default_64bit"It may take a couple of minutes to start up for the first time, since many files from a sample database will need to be copied into the new directory structure, and onto the ZFS file systems.

STATE STIME FMRI

online 0:43:37 svc:/application/database/postgresql_83:default_64bit

Setting Up Sample Role/User, Database, and Client Access:

By default, all authenticated users are allowed to leverage the Postgres database under Solaris, but only on the same host. The default version of Postgres may be older than the version you wish to use.

V240/ivadmin$ type createdb psqlSince Solaris supports multiple versions of Postgres, it is important to set paths before using commands. A command should be used in the top of any script which runs Postgres or any command prompt where the user is intending on performing a lot of Postgres work.

createdb is /usr/bin/createdb

psql is /usr/bin/psql

V240/ivadmin$ psql --version

psql (PostgreSQL) 8.1.18

contains support for command-line editing

V240/ivadmin$ createdb --version

createdb (PostgreSQL) 8.1.18

V240/user$ PATH=/usr/postgres/8.3/bin:$PATHA privileged "role" (or "user") can set up a database and client access from another "user" or "role". The "createuser" binary is a wrapper around the "CREATE ROLE" command in Postgres.

V240/user$ export PATH

In the case below, a new non-superuser (-S) will be created, which can create databases ("-d"), be restricted from creating new "roles" or "users" (-R), and log into the database ("-l"). Also, the binary command will echo the postgres command used ("-e"), for clarity sake.

V240/user$ su - root

Password:

V240/root$ su - postgres

V240/postgres$ PATH=/usr/postgres/8.3/bin:$PATH

V240/postgres$ export PATH

V240/postgres$ createuser -S -d -R -l -e user

CREATE ROLE user NOSUPERUSER CREATEDB NOCREATEROLE INHERIT LOGIN;

(The addition of the proper path was used, in case it is not set up globally on the platform.)

The creation of the database can now be done by the Solaris user "user", which is also Postgres "role". By default, the name of the database is the same name as the "user".

V240/user$ PATH=/usr/postgres/8.3/bin:$PATH

V240/user$ export PATH

V240/ivadmin$ createdb -e

CREATE DATABASE user;

After the database is created, the

V240/ivadmin$ psqlThe process of creating objects in the database can now take place.

Welcome to psql 8.3.8, the PostgreSQL interactive terminal.

Type: \copyright for distribution terms

\h for help with SQL commands

\? for help with psql commands

\g or terminate with semicolon to execute query

\q to quit

user=>

Client Access Error:

If the user has never created a database, the first attempt access attempt will present an error such as:

V240/user$ psql

psql: FATAL: database "user" does not exist

This indicates that a database must be created for that user.

Creating Database Error:

The "createdb" executable is a binary wrapper around the "create database" Postgres command. Databases are created by "cloning" a standard database template. If a database is created before the role is created, an error such as the following is presented:

V240/user$ createdbBefore a database can be created, a user must be able to do this.

createdb: could not connect to database postgres: FATAL: role "user" does not exist

Creating Role Error:

A "role" is sometimes referred to as a "user". The Solaris user name is often tied directly as the "role". If the user is not privileged, the following error is presented:

V240/ivadmin$ createuserA privileged user must create new "roles". Under Solaris, this is the "postgres" user.

Enter name of role to add: user

Shall the new role be a superuser? (y/n) n

Shall the new role be allowed to create databases? (y/n) n

Shall the new role be allowed to create more new roles? (y/n) n

createuser: could not connect to database postgres: FATAL: role "user" does not exist

Resources:

Other Postgres resources are noted below:

[html] PostgreSQL 8.3.21 Documentation

[html] Dynamic Tracing of PostgreSQL via DTrace (in 8.3)

[pdf] Availability of PostgreSQL in the Data Center

[html] 2010-05 Setting up PostgreSQL under Solaris 10

[pdf] 2008-?? - Best Practices with PostgreSQL on Solaris

[html] 2005-11 - Tuning PostgreSQL under Solaris x64

[html] 2005-05 - Tuning Write Performance of PostgreSQL on Solaris

[html] 2005-04 - Tuning Solaris for PostgreSQL Read and Write Performance (8.0.2)

Labels:

Oracle,

PostGres,

PostgreSQL,

psql,

Service Management Facility,

smf,

Solaris,

Solaris 10,

Sun,

Sun Microsystems,

svcadm,

svcs

Friday, November 30, 2012

"The UNIX System" from the AT&T Archives

Film (produced in 1982) includes Brian Kernighan demonstrating how to write a spell check command in shell, interviews with Ken Thompson, Dennis Ritchie, and much more.

Labels:

ATT,

Brian Kernighan,

Dennis Ritchie,

Ken Thompson

MartUX: Tab Updated!

For those of you waiting for consolidated information on the SVR4 SPARC OpenIndiana iniative - MartUX now has a Network Management Tab!

A copy of it is now found here:

MartUX is Martin Bochnig's SVR4 release of OpenIndiana on SPARC. MartUX was based upon OpenSolaris. Originally released only on sun4u architecture, it now also runs as a Live-DVD under sun4v.

[http] MartUX Announcement: September 27, 2012

[http] MartUX OpenIndiana 151 Alpha Live-DVD Wiki

[http] Martin Bochnig's Blog for MartUX, OpenIndiana SPARC port from OpenSolaris

[http] Martin Bochnig Twitter account

[http] MartUX site (down since storage crash)

[http] MartUX site snapshot from archive.org

[html] Network Management MartUX articles

A copy of it is now found here:

MartUX is Martin Bochnig's SVR4 release of OpenIndiana on SPARC. MartUX was based upon OpenSolaris. Originally released only on sun4u architecture, it now also runs as a Live-DVD under sun4v.

[http] MartUX Announcement: September 27, 2012

[http] MartUX OpenIndiana 151 Alpha Live-DVD Wiki

[http] Martin Bochnig's Blog for MartUX, OpenIndiana SPARC port from OpenSolaris

[http] Martin Bochnig Twitter account

[http] MartUX site (down since storage crash)

[http] MartUX site snapshot from archive.org

[html] Network Management MartUX articles

Thursday, November 29, 2012

TribbliX: Tab Updated!

For those waiting for consolidated information - TribbliX now has a consolidated tab on Network Management blog!

A current snapshot can be seen here:

Tibblix is Peter Tribble's incarnation of an SVR4 based OS, leveraging Illumos at the core. This is an Intel only distribution at this point. Tribblix is a brand-new pre-release, downloaded as a live-dvd, and based upon OpenIndiana.

[html] Tribblix Announcement: October 23, 2012

[html] Distribution Home Page

[html] Download Page

[html] Installation Guide

[html] Basic Usage Starting Pointers

[html] Peter Tribble Blog

[html] Peter Tribble Solaris Desktop Blog

[html] Network Management Tribblix Posts

A current snapshot can be seen here:

Tibblix is Peter Tribble's incarnation of an SVR4 based OS, leveraging Illumos at the core. This is an Intel only distribution at this point. Tribblix is a brand-new pre-release, downloaded as a live-dvd, and based upon OpenIndiana.

[html] Tribblix Announcement: October 23, 2012

[html] Distribution Home Page

[html] Download Page

[html] Installation Guide

[html] Basic Usage Starting Pointers

[html] Peter Tribble Blog

[html] Peter Tribble Solaris Desktop Blog

[html] Network Management Tribblix Posts

Wednesday, November 28, 2012

MartUX: Second SVR4 SPARC Release!

MartUX History: MarTux is an OpenSolaris based desktop, created by Martin Bochnig, and released for SPARC platforms. It was originally released in October of 2009, billed as the first distribution on SPARC, but only on sun4u architectures. An early screenshot is here. Some of the early work proved difficult in compressing OpenSolaris onto a single live DVD, but with the help of the BeliniX community, the work was successful. A sun4v release would soon follow.

MartUX - The New Work:

In July 2012, Martin started his new work, of updating MartUX on SPARC. In August, Martin indicated he was "working day and night" to continue progress on a new distribution cut. The question was broached on the SPARC distribution again, in September. The shift from IPS to Live-DVD occurred, in order to release a product. People discuss buying of older SPARC hardware and reserving some equipment, in preparation for the new distribution. Martin posts some screenshots of the new distribution on September 10. Discussions about under 10% savings between gzip-5 and gzip-9 occur, and the drawback of great CPU usage during the packaging phase. By September 27, a MartUX DVD ISO was made available.

MartUX - September 2012 Release:

By the end of September of 2012, there was an indication a new distribution based upon OpenSolaris code base and OpenIndiana. OpenIndiana now uses a new living codebase of Illumos to augment the static code base of OpenSolaris. The OpenIndiana community had screenshots and instructions noted on their 151a release wiki of the MartUX LiveDVD. A short, but useful articles appeared on some of the industry wires such as Phoronix. MartUX OpenIndiana SPARC release is also now booting on the first sun4v or newer "T" architectures from Sun/Oracle!

Tragedy Strikes:

October 4 hits, Martin starts the process of formalizing the distribution. The goal is to move from his previous MartUX distribution name. Martin, however, had a catastrophic disk failure. His struggling was noted on a different list, but he comments about it on the OpenIndiana list, suggesting using triple-mirrors, and disconnecting the third mirror to ensure a solid backup.

Where's Martin?

Martin mentioned on October 2nd through twitter that Dave Miller was working on an installer and pkgadd. There was little sign of Martin posting in November on the OpenIndiana list, after his hard disk issues, but an occasional tweet here or there. Martin set up a separate blogspot for SPARC OI work. The martux.org web site is down, as of the end of November, although a snapshot of the content is still available on archive.org. We hope all is well, as he continues his unbelievable work on a SPARC release.

MartUX - The New Work:

In July 2012, Martin started his new work, of updating MartUX on SPARC. In August, Martin indicated he was "working day and night" to continue progress on a new distribution cut. The question was broached on the SPARC distribution again, in September. The shift from IPS to Live-DVD occurred, in order to release a product. People discuss buying of older SPARC hardware and reserving some equipment, in preparation for the new distribution. Martin posts some screenshots of the new distribution on September 10. Discussions about under 10% savings between gzip-5 and gzip-9 occur, and the drawback of great CPU usage during the packaging phase. By September 27, a MartUX DVD ISO was made available.

MartUX - September 2012 Release:

By the end of September of 2012, there was an indication a new distribution based upon OpenSolaris code base and OpenIndiana. OpenIndiana now uses a new living codebase of Illumos to augment the static code base of OpenSolaris. The OpenIndiana community had screenshots and instructions noted on their 151a release wiki of the MartUX LiveDVD. A short, but useful articles appeared on some of the industry wires such as Phoronix. MartUX OpenIndiana SPARC release is also now booting on the first sun4v or newer "T" architectures from Sun/Oracle!

Tragedy Strikes:

October 4 hits, Martin starts the process of formalizing the distribution. The goal is to move from his previous MartUX distribution name. Martin, however, had a catastrophic disk failure. His struggling was noted on a different list, but he comments about it on the OpenIndiana list, suggesting using triple-mirrors, and disconnecting the third mirror to ensure a solid backup.

Where's Martin?

Martin mentioned on October 2nd through twitter that Dave Miller was working on an installer and pkgadd. There was little sign of Martin posting in November on the OpenIndiana list, after his hard disk issues, but an occasional tweet here or there. Martin set up a separate blogspot for SPARC OI work. The martux.org web site is down, as of the end of November, although a snapshot of the content is still available on archive.org. We hope all is well, as he continues his unbelievable work on a SPARC release.

Labels:

Illumos,

Martin Bochnig,

MartUX,

OpenIndiana,

OpenSolaris

Tuesday, November 27, 2012

Tribblix: Second SVR4 Intel Release!

Tribblix Update!

Network Management announced the pre-release of Tribblix at the end of October 2012. There is a new release which happened early November 2012.

Note - the user community can now download an x64 version called 0m1.

Oh, how we are waiting for a SVR4 SPARC release!

Wednesday, November 21, 2012

SPARC at 25: Past, Present and Future (Replay)

In October, Network Management published a reminder concerning:

If you missed it, you can view the replay here, thanks to YouTube!

Thursday, November 1, 2012 Event: 11:00 AM

Lunch: 12:00 Noon

Computer History Museum

1401 North Shoreline Blvd.

Mountain View, California 94043

SPARC at 25: Past, Present and Future

Presented by the Computer History Museum Semiconductor Special Interest Group

If you missed it, you can view the replay here, thanks to YouTube!

| Panelists presenting include industry giants: Andy Bechtolsheim, Rick Hetherington, Bill Joy, David Patterson, Bernard Lacroute, Anant Argawal |

Thursday, November 1, 2012 Event: 11:00 AM

Lunch: 12:00 Noon

Computer History Museum

1401 North Shoreline Blvd.

Mountain View, California 94043

Monday, November 19, 2012

ZFS, Metaslabs, and Space Maps

ZFS, Metaslabs, and Space Maps

Abstract:

File systems are a memory structure which represents data, often stored on persistent media such as disks. For decades, the standard file system used by multi-vendor operating systems was the Unix File System, or UFS. While this file system served well for decades, storage continued to grow exponentially, and existing algorithms could not keep up. With the opportunity to re-create the file system, Sun Microsystems worked the underlying algorithms to create ZFS - but different data structures were used, unfamiliar to the traditional computer scientist.

Space Maps:

One of the most significant driving factors to massive scalability is dealing with free space. Jeff Bonwick of Sun Microsystems, now of Oracle, described some of the challenges to existing space mapping algorithms about a half-decade ago.

Bitmaps

The most common way to represent free space is by using a bitmap. A bitmap is simply an array of bits, with the Nth bit indicating whether the Nth block is allocated or free. The overhead for a bitmap is quite low: 1 bit per block. For a 4K blocksize, that's 1/(4096\*8) = 0.003%. (The 8 comes from 8 bits per byte.)

For a 1GB filesystem, the bitmap is 32KB -- something that easily fits in memory, and can be scanned quickly to find free space. For a 1TB filesystem, the bitmap is 32MB -- still stuffable in memory, but no longer trivial in either size or scan time. For a 1PB filesystem, the bitmap is 32GB, and that simply won't fit in memory on most machines. This means that scanning the bitmap requires reading it from disk, which is slower still.

Clearly, this doesn't scale....B-trees

Another common way to represent free space is with a B-tree of extents. An extent is a contiguous region of free space described by two integers: offset and length. The B-tree sorts the extents by offset so that contiguous space allocation is efficient. Unfortunately, B-trees of extents suffer the same pathology as bitmaps when confronted with random frees....Deferred frees

One way to mitigate the pathology of random frees is to defer the update of the bitmaps or B-trees, and instead keep a list of recently freed blocks. When this deferred free list reaches a certain size, it can be sorted, in memory, and then freed to the underlying bitmaps or B-trees with somewhat better locality. Not ideal, but it helps....Space maps: log-structured free lists

Recall that log-structured filesystems long ago posed this question: what if, instead of periodically folding a transaction log back into the filesystem, we made the transaction log be the filesystem?

Well, the same question could be asked of our deferred free list: what if, instead of folding it into a bitmap or B-tree, we made the deferred free list be the free space representation?That is precisely what ZFS does. ZFS divides the space on each virtual device into a few hundred regions called metaslabs. Each metaslab has an associated space map, which describes that metaslab's free space. The space map is simply a log of allocations and frees, in time order. Space maps make random frees just as efficient as sequential frees, because regardless of which extent is being freed, it's represented on disk by appending the extent (a couple of integers) to the space map object -- and appends have perfect locality. Allocations, similarly, are represented on disk as extents appended to the space map object (with, of course, a bit set indicating that it's an allocation, not a free)....

When ZFS decides to allocate blocks from a particular metaslab, it first reads that metaslab's space map from disk and replays the allocations and frees into an in-memory AVL tree of free space, sorted by offset. This yields a compact in-memory representation of free space that supports efficient allocation of contiguous space. ZFS also takes this opportunity to condense the space map: if there are many allocation-free pairs that cancel out, ZFS replaces the on-disk space map with the smaller in-memory version.

Metaslabs:

One may as, what exactly is metaslab and how does it work?

Adam Levanthal, formerly of Sun Microsystems, now at Delphix working on an open-source ZFS and DTrace implementations, describes metaslabs in a little more detail:

For allocation purposes, ZFS carves vdevs (disks) into a number of “metaslabs” — simply smaller, more manageable chunks of the whole. How many metaslabs? Around 200.

Why 200? Well, that just kinda worked and was never revisited. Is it optimal? Almost certainly not. Should there be more or less? Should metaslab size be independent of vdev size? How much better could we do? All completely unknown.

The space in the vdev is allotted proportionally, and contiguously to those metaslabs. But what happens when a vdev is expanded? This can happen when a disk is replaced by a larger disk or if an administrator grows a SAN-based LUN. It turns out that ZFS simply creates more metaslabs — an answer whose simplicity was only obvious in retrospect.

For example, let’s say we start with a 2T disk; then we’ll have 200 metaslabs of 10G each. If we then grow the LUN to 4TB then we’ll have 400 metaslabs. If we started instead from a 200GB LUN that we eventually grew to 4TB we’d end up with 4,000 metaslabs (each 1G). Further, if we started with a 40TB LUN (why not) and grew it by 100G ZFS would not have enough space to allocate a full metaslab and we’d therefore not be able to use that additional space.

Fragmentation:

I remember reading on the internet all kinds of questions such as "is there a defrag utility for ZFS?"

While the simple answer is no, it seems the management of metaslabs inherent to ZFS attempts to mitigate fragmentation, by rebuilding the freespace maps quite regularly, and offers terrific improvements in performance as file systems become closer to being full.

Expand and Shrink a ZFS Pool:

Expansion of a ZFS pool is easy. One can swap out disks or just add them on.

What trying to shrink a file system? This may be, perhaps, the most significant heart-burn with ZFS. Shrinkage is missing.

Some instructions on how RAID restructure or shrinkage could be done were posted by Joerg at Sun Microsystems, now Oracle, but shrinkage requires additional disk space (which is what people are trying to get, when they desire a shrink.) Others have posted procedures for shrinking root pools.

One might speculate the existence (and need to reorganize of destroy) the metaslab towards the trailing edges of the ZFS file system pool may introduce the complexities, since these are supposed to only grow, and hold data sequentially in time order.

Network Management Implications:

I am at the point now, where I have a need to divide up a significant application pool into smaller pools, to create a new LDom on one of my SPARC T4-4's. I need to fork off a new Network Management instance. I would love for the ability to shrink zpools in the near future (Solaris 10 Update 11?) - but I am not holding out much hope, considering ZFS existed over a half-decade, there has been very little discussion on this point, and how metslabs and freespace are managed.

Wednesday, November 14, 2012

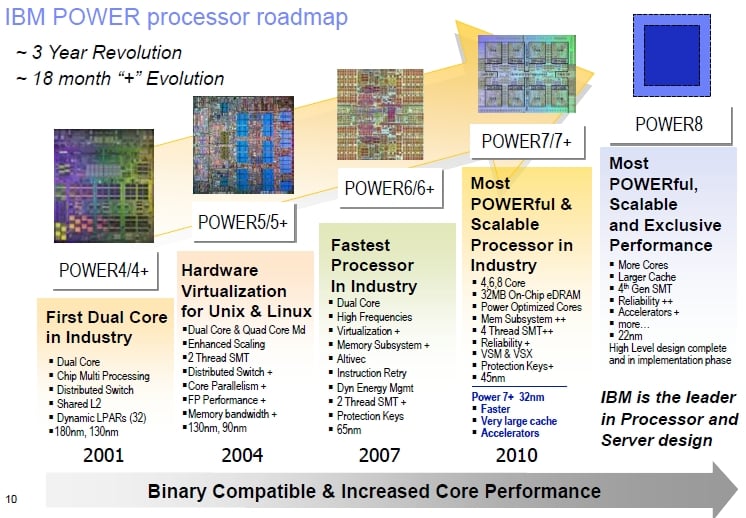

IBM POWER 7+ Better Late than Never?

Anticipation of POWER 7+

In August 2011, when Oracle released the SPARC T4, IBM decided to release something, but it was not a CPU chip, but a roadmap... problem was - IBM POWER 7+ was late.

Not only was it late, but apparently it would be VERY late. In a previous Network Management article:

In August 2011, when Oracle released the SPARC T4, IBM decided to release something, but it was not a CPU chip, but a roadmap... problem was - IBM POWER 7+ was late.

|

| Courtesy, The Register |

Thank you for the IBM August 2011 POWER Roadmap tha the public marketplace has been begging for... did we miss the POWER7+ release??? A POWER 7 February 2010 launch would have POWER 7+ August 2011 launch (and today is August 31, so unless there is a launch in the next 23 hours, it looks late to me.)

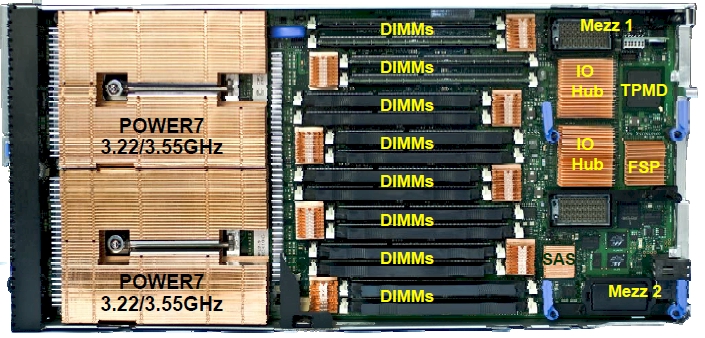

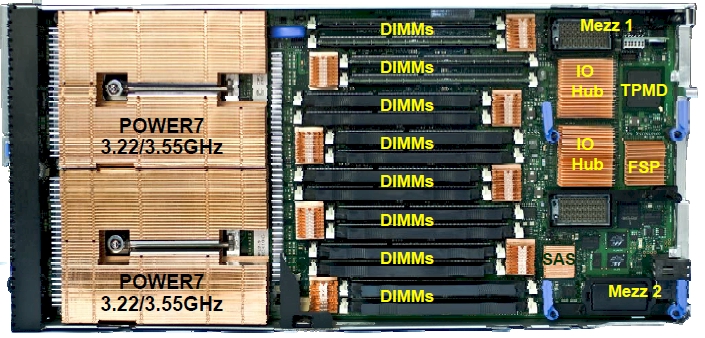

|

| IBM Flex p260 CPU Board, courtesy The Register |

Disposition of POWER 7+

Just announced, via TPM at The Register, is the ability to provision an IBM p260 with new POWER 7+ processors!

IBM finally released POWER 7+, in one system, 15 months late. How underwhelming. |

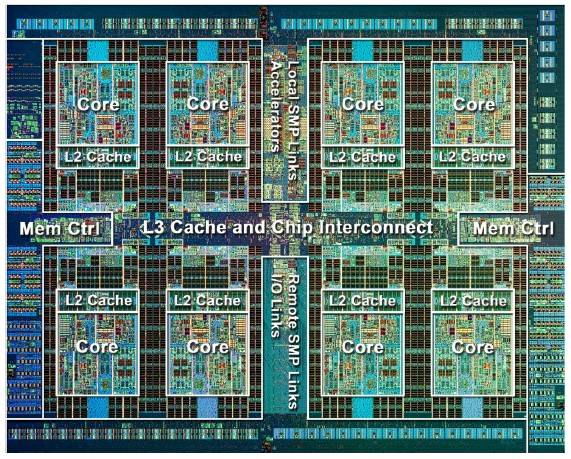

| IBM POWER 7+ die image, courtsy The Register |

Disposition of POWER 8

The question that no one is asking: Where is POWER 8, according to the IBM roadmap?

The POWER 8, according to the roadmap, should be released roughly February of 2013. Why release the POWER 7+ just 3 months shy of releasing the POWER 8? This suggests a problem for IBM.

The 2013 timeframe is roughly when Oracle suggested they may start releasing SPARC T5 platforms, ranging from 1 to 8 sockets. The POWER 8 surprisingly has a lot of common features with the SPARC T5 -the POWER 8 may have gone back to the drawing board to copy SPARC T5. How late is POWER 8?

Conclusions:

Whatever happened, something broke at IBM. For a piece of silicon to be delayed 15 months, IBM must have needed to bring POWER 7+ back to the proverbial drawing board. The big question is - did IBM need to bring POWER 8 back to the drawing board, as well?

Automatic Storage Tiering

|

| Image courtesy: WWPI. |

Abstract:

Automatic Storage Tiering or Hierarchical Storage Management is the process of placing the most data onto storage which is most cost effective, while meeting basic accessibility and efficient requirements. There has been much movement over the past half-decade in storage management.

Early History:

When computers were first being built on boards, RAM (most expensive) held the most volatile data while ROM held the least changing data. EPROM's provided a way for users to occasionally change mostly static data (requiring a painfully slow erasing mechanism using light and special burning mechanism using high voltage), placing the technology in a middle tier. EEPROM's provided a simpler way to update data on the same machine, without removing the chip for burning. Tape was created, to archive storage for longer periods of time, but it was slow, so it went to the bottom of the capacity distribution pyramid. Rotating disks (sometimes referred to as rotating rust) was created, taking a middle storage tier. As disks became faster, the fastest disks (15,000 RPM) moved towards the upper part of the middle tier while slower disks (5,400RPM or slower) moved towards the bottom part of the middle tier. Eventually, consumer-grade (not very reliable) IDE and SATA disks became available, occupying the higher areas of the lowest tier, slightly above tape.

Things seemed to settle out for awhile in the storage hierarchy; RAM, ROM, EEPROM, 15K FibreChannel/SCSI/SAS Disks, 7.2K FibreChannel/SCSI/SAS Disks, IDE/SATA, Tape - until the creation of ZFS by Sun Microsystems.

|

| Logo Sun Microsystems |

In the early 2000's, Sun Microsystems started to invest more in flash technology. They anticipated a revolution in storage management, with the increase performance of a technology called "Flash", which is little more than EEPROM's. These became known as Solid State Drives or SSD's. In 2005, Sun released ZFS under their Solaris 10 operating system and started adding features that included flash acceleration.

There was a general problem that Sun noticed: flash was either cheap with low reliability (Multi-Level Cell or MLC) or expensive with higher reliability (Single-Level Cell or SLC). It was also noted that flash was not as reliable as disks (for many write environment.) The basic idea was to turn automatic storage management on it's head with the introduction of flash to ZFS under Solaris: RAM via ARC (Adaptive Read Cache), Cheap Flash via L2ARC (Level 2 Adaptive Read Cache), Expensive Flash via Write Log, Cheap Disks (protected with disk parity & CRC's at the block level.)

|

| Sun Funfire 4500 aka Thumper, courtesy Sun Microsystems |

For the next 5-7 years, the industry experienced hand-wringing with what to do with the file system innovation introduced by Sun Microsystems under Solaris ZFS. More importantly, ZFS is a 128 bit file system, meaning massive data storage is now possible, on a scale that could not be imagined. The OS and File System were also open-sourced, meaning it was a 100% open solution.

With this technology, built into all of their servers, This became increasingly important as Sun released mid-range storage systems based upon this technology with a combination of high capacity, lower price, and higher speed access. Low end solutions based upon ZFS also started to hit the market, as well as super-high-end solutions in MPP clusters.

|

| Drobo-5D, courtesy Drobo |

Recent Vendor Advancements - Drobo:

A small-business centric company called Data Robotics or Drobo released a small RAID system with an innovative feature: add drives of different size, system adds capacity and reliability on-the-fly, with the loss of some disk space. While there is some disk space loss noted, the user is protected against drive failure on any drive in the RAID, regardless of size, and any size drive could replace the bad unit.

|

| Drobo-Mini, courtesy Drobo |

The challenge with Drobo - it is a proprietary hardware solution, sitting on top of a fairly expensive hardware, for the low to medium end market. This is not your $150 raid box, where you can stick in a couple of drives and go.

Recent Vendor Advancements - Apple:

Not to be left out, Apple was going to bundle ZFS into every Apple Macintosh they were to ship. Soon enough, Apple canceled their ZFS announcement, canceled their open-source ZFS announcement, placed job ads for people to create a new file system, and went into hybernation for years.

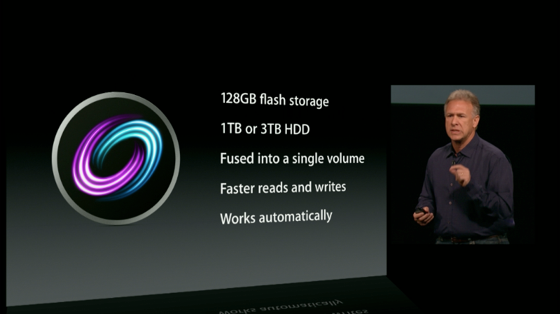

Apple recently released a Mac OSX feature they referred to as their Fusion Drive. Mac Observer noted:

"all writes take place on the SSD drive, and are later moved to the mechanical drive if needed, resulting in faster initial writes. The Fusion will be available for the new iMac and new Mac mini models announced today"Once again, the market was hit with an innovative product, bundled into an Operating System, not requiring proprietary software.

Implications for Network Management:

With continual improvement in storage technology, this will place an interesting burden on Network, Systems, and Storage Management vendors. How does one manage all of these solutions in an Open way, using Open protocols, such as SNMP?

The vendors to "crack" the management "nut" may be in the best position to have their product accepted into existing heterogeneous storage managed shops, or possibly supplant them.

Subscribe to:

Posts (Atom)