Abstract:

Every so often, when working on a Sun server, it is helpful to know the position and speed of the CPU boards in order to plan upgrades. This article takes a few common machines and provides basic, easy-to-read instructions for determining CPU capabilities.

Sun V490 (SUNW,Sun-Fire-V490)

Introduction

The Sun Microsystems Sun Fire V490 was a machine at the high end of the workgroup servers. This server has a capacity of 2 CPU boards; each CPU board holds 2 sockets, and each socket typically holds 2 cores.

These are not Intel cores, but each core added to the socket increases performance close to linearly (instead of by 50%, as in Intel or AMD sockets of this age).

Determining Class

The "uname" command provides an easy way to determine the class of machine.

sun1316$ uname -a

SunOS sun1316 5.9 Generic_122300-57 sun4u sparc SUNW,Sun-Fire-V490

The name of the platform is "Sun-Fire-V490", hinting that this chassis is capable of 4 sockets. The "90" indicates it was an UltraSPARC IV based machine, which was capable of dual cores. (The chassis is also compatible with older single-core UltraSPARC III processors.)
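The platform name can also be pulled out of the "uname -a" line by a script. The following is a minimal sketch, assuming the platform name is always the last field of the line and carries a vendor prefix ending in a comma; the function name platform_model is hypothetical.

```shell
# Hypothetical helper: read a `uname -a` line on stdin and print the
# platform model, i.e. the last field with the "SUNW," style prefix removed.
platform_model() {
    awk '{
        name = $NF                  # last whitespace-separated field
        sub(/^[^,]*,/, "", name)    # drop everything up to the first comma
        print name
    }'
}

# Usage on a live system (assumption: output shaped like the example above):
#   uname -a | platform_model
```

Fed the line shown above, this prints Sun-Fire-V490.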

Determining Boards

The psrinfo command is available in the /usr/sbin directory.

sun1316$ /usr/sbin/psrinfo

0 on-line since 07/15/2012 02:31:01

1 on-line since 07/15/2012 02:31:01

2 on-line since 07/15/2012 02:31:01

3 on-line since 07/15/2012 02:31:00

16 on-line since 07/15/2012 02:31:01

17 on-line since 07/15/2012 02:31:01

18 on-line since 07/15/2012 02:31:01

19 on-line since 07/15/2012 02:31:01

Processors numbered 16 and over indicate that the socket is a dual-core socket. On this chassis, sockets 0-1 are located on board 1, while sockets 2-3 are located on board 2. Both boards are populated.
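Counting sockets and cores by eye gets tedious on larger machines. The sketch below reads plain psrinfo output on standard input and applies the numbering rule described here: IDs below 16 are treated as the first core of a socket, and IDs of 16 and over as second cores. That rule is an assumption taken from this chassis and will not hold for other machines; the function name summarize_sockets is hypothetical.

```shell
# Hypothetical helper: summarize plain `psrinfo` output read from stdin.
# Assumption (V490/V890 numbering only): an ID below 16 is the first core
# of a socket, and an ID of 16 or more is the second core of a socket.
summarize_sockets() {
    awk '
        $1 ~ /^[0-9]+$/ {
            id = $1 + 0
            if (id < 16) sockets++    # one first core per populated socket
            else         second++     # second core of a dual-core socket
        }
        END {
            printf "sockets=%d cores=%d\n", sockets, sockets + second
        }
    '
}

# Usage on a live system:
#   /usr/sbin/psrinfo | summarize_sockets
```

For the eight processors listed above, this reports 4 sockets and 8 cores.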

Determining Performance

The psrinfo command with the "-v" option provides additional information, such as the speed of the individual cores.

sun1316$ psrinfo -v

Status of virtual processor 0 as of: 07/19/2012 22:43:35

on-line since 07/15/2012 02:31:01.

The sparcv9 processor operates at 1350 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 1 as of: 07/19/2012 22:43:35

on-line since 07/15/2012 02:31:01.

The sparcv9 processor operates at 1350 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 2 as of: 07/19/2012 22:43:35

on-line since 07/15/2012 02:31:01.

The sparcv9 processor operates at 1350 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 3 as of: 07/19/2012 22:43:35

on-line since 07/15/2012 02:31:00.

The sparcv9 processor operates at 1350 MHz,

and has a sparcv9 floating point processor.

...

In the above example, I trimmed the output for the second core on each of the 4 sockets, since the information is identical to that of the first core on each socket.
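The verbose output is long, so it can help to reduce it to one line per processor. This is a minimal sketch, assuming the output keeps the "Status of virtual processor N" and "operates at N MHz," wording shown above; the function name cpu_speeds is hypothetical.

```shell
# Hypothetical helper: reduce `psrinfo -v` output (stdin) to "ID MHz" pairs.
# Assumption: the wording of the status and speed lines matches the example.
cpu_speeds() {
    awk '
        /Status of virtual processor/ { id = $5 }   # 5th field is the ID
        /operates at/ {
            # The clock speed is the field just before the "MHz," token.
            for (i = 1; i < NF; i++)
                if ($(i + 1) ~ /^MHz/) print id, $i
        }
    '
}

# Usage on a live system:
#   /usr/sbin/psrinfo -v | cpu_speeds
```

Each output line pairs a processor ID with its clock speed in MHz, which makes the untrimmed second cores easy to scan as well.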

Sun V890 (SUNW,Sun-Fire-V890)

Introduction

The Sun V890 was a machine squarely in the high end of the workgroup servers. The server has a capacity of 4 CPU boards; each CPU board holds 2 sockets, and each socket typically holds 2 cores.

These are not Intel cores, but each core added to the socket increases performance close to linearly (instead of by 50%, as in Intel or AMD sockets of this age).

Determining Class

sun1376$ uname -a

SunOS sun1376 5.10 Generic_144488-11 sun4u sparc SUNW,Sun-Fire-V890

The name of the platform is "Sun-Fire-V890", hinting at 8-socket capability. The "90" hints that it was capable of using dual-core UltraSPARC IV processors. The chassis was also capable of using UltraSPARC III boards.

Determining Boards

The psrinfo command is available in the /usr/sbin directory.

sun1376$ psrinfo

0 on-line since 05/16/2011 14:26:00

1 on-line since 05/16/2011 14:26:00

2 on-line since 05/16/2011 14:26:00

3 on-line since 05/16/2011 14:26:00

4 on-line since 05/16/2011 14:26:00

5 on-line since 05/16/2011 14:26:00

6 on-line since 05/16/2011 14:26:00

7 on-line since 05/16/2011 14:25:55

16 on-line since 05/16/2011 14:26:00

17 on-line since 05/16/2011 14:26:00

18 on-line since 05/16/2011 14:26:00

19 on-line since 05/16/2011 14:26:00

20 on-line since 05/16/2011 14:26:00

21 on-line since 05/16/2011 14:26:00

22 on-line since 05/16/2011 14:26:00

23 on-line since 05/16/2011 14:26:00

Processors numbered 16 and over indicate that the socket is a dual-core socket. On this chassis, sockets 0-1 are located on board 1, sockets 2-3 on board 2, sockets 4-5 on board 3, and sockets 6-7 on board 4. All boards are populated.
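The socket-to-board layout just described reduces to simple arithmetic: two sockets per board, with the second core of a socket numbered 16 higher than the first. The sketch below encodes that rule; it is an assumption drawn from the V890 description here, and the function name board_of_cpu is hypothetical.

```shell
# Hypothetical helper: print the board number for a psrinfo processor ID.
# Assumptions (V890 layout as described above): the second core of a socket
# is numbered 16 higher than the first, and each board holds two sockets.
board_of_cpu() {
    id=$1
    socket=$((id % 16))        # fold a second-core ID back onto its socket
    echo $((socket / 2 + 1))   # sockets 0-1 -> board 1, 2-3 -> board 2, ...
}

# Usage:
#   board_of_cpu 22
```

For example, processor 22 is the second core of socket 6, which places it on board 4.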

Determining Performance

The psrinfo command with the "-v" option provides additional information, such as the speed of the individual cores.

sun1376$ psrinfo -v

Status of virtual processor 0 as of: 07/19/2012 22:55:41

on-line since 05/16/2011 14:26:00.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 1 as of: 07/19/2012 22:55:41

on-line since 05/16/2011 14:26:00.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 2 as of: 07/19/2012 22:55:41

on-line since 05/16/2011 14:26:00.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 3 as of: 07/19/2012 22:55:41

on-line since 05/16/2011 14:26:00.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 4 as of: 07/19/2012 22:55:41

on-line since 05/16/2011 14:26:00.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 5 as of: 07/19/2012 22:55:41

on-line since 05/16/2011 14:26:00.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 6 as of: 07/19/2012 22:55:41

on-line since 05/16/2011 14:26:00.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 7 as of: 07/19/2012 22:55:41

on-line since 05/16/2011 14:25:55.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

...

In the above example, I trimmed the output for the second core on each of the 8 sockets, since the information is identical to that of the first core on each socket. Note that the cores run at 1.5GHz.

Sun E2900 (SUNW,Netra-T12)

Introduction

The Sun E2900 was a machine on the cusp between a workgroup and a midrange server. This server has a capacity of 3 CPU boards; each CPU board holds 4 sockets, and each socket typically holds 2 cores.

These are not Intel cores, but each core added to the socket increases performance close to linearly (instead of by 50%, as in Intel or AMD sockets of this age).

Determining Class

The "uname" command provides an easy way to determine the class of machine.

sun1142$ uname -a

SunOS sun1142 5.10 Generic_138888-07 sun4u sparc SUNW,Netra-T12

The name of the platform is "SUNW,Netra-T12", hinting that this chassis is capable of 12 sockets. The "900" in the 2900 model name is a hint that this chassis is capable of using UltraSPARC IV dual-core CPUs. (The chassis is also compatible with older single-core UltraSPARC III processors.)

Determining Boards

The psrinfo command is available in the /usr/sbin directory.

sun1142$ /usr/sbin/psrinfo

0 on-line since 02/12/2012 02:27:26

1 on-line since 02/12/2012 02:27:42

2 on-line since 02/12/2012 02:27:42

3 on-line since 02/12/2012 02:27:42

8 on-line since 02/12/2012 02:27:42

9 on-line since 02/12/2012 02:27:42

10 on-line since 02/12/2012 02:27:42

11 on-line since 02/12/2012 02:27:42

512 on-line since 02/12/2012 02:27:42

513 on-line since 02/12/2012 02:27:42

514 on-line since 02/12/2012 02:27:42

515 on-line since 02/12/2012 02:27:42

520 on-line since 02/12/2012 02:27:42

521 on-line since 02/12/2012 02:27:42

522 on-line since 02/12/2012 02:27:42

523 on-line since 02/12/2012 02:27:42

Processors numbered 512 and over indicate that the socket is a dual-core socket. On this chassis, sockets 0-3 are located on board 1, while sockets 8-11 are located on board 3. Board 2 is missing.
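On this chassis the processor IDs fall into blocks of 8 (0-3 and 8-11 in the listing above), with second cores numbered 512 higher. The sketch below groups psrinfo output by those blocks and counts sockets and cores in each. The block width of 8 and the 512 offset are assumptions inferred from the listing, the sketch does not decide which physical board a block corresponds to, and the function name summarize_blocks is hypothetical.

```shell
# Hypothetical helper: group plain `psrinfo` output (stdin) into ID blocks.
# Assumptions (inferred from the E2900 listing): second cores are numbered
# 512 higher than first cores, and each board occupies an 8-wide ID block.
summarize_blocks() {
    awk '
        $1 ~ /^[0-9]+$/ {
            id = $1 + 0
            base = (id >= 512) ? id - 512 : id   # fold second cores back
            block = int(base / 8)
            cores[block]++
            if (id < 512) sockets[block]++       # first core marks a socket
            if (block > max) max = block
        }
        END {
            for (b = 0; b <= max; b++)
                if (b in cores)
                    printf "block=%d sockets=%d cores=%d\n", b, sockets[b], cores[b]
        }
    '
}

# Usage on a live system:
#   /usr/sbin/psrinfo | summarize_blocks
```

For the listing above, this reports two populated blocks with 4 sockets and 8 cores each.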

Determining Performance

The psrinfo command with the "-v" option provides additional information, such as the speed of the individual cores.

sun1142$ /usr/sbin/psrinfo -v

Status of virtual processor 0 as of: 07/19/2012 22:20:39

on-line since 02/12/2012 02:27:26.

The sparcv9 processor operates at 1950 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 1 as of: 07/19/2012 22:20:39

on-line since 02/12/2012 02:27:42.

The sparcv9 processor operates at 1950 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 2 as of: 07/19/2012 22:20:39

on-line since 02/12/2012 02:27:42.

The sparcv9 processor operates at 1950 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 3 as of: 07/19/2012 22:20:39

on-line since 02/12/2012 02:27:42.

The sparcv9 processor operates at 1950 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 8 as of: 07/19/2012 22:20:39

on-line since 02/12/2012 02:27:42.

The sparcv9 processor operates at 1950 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 9 as of: 07/19/2012 22:20:39

on-line since 02/12/2012 02:27:42.

The sparcv9 processor operates at 1950 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 10 as of: 07/19/2012 22:20:39

on-line since 02/12/2012 02:27:42.

The sparcv9 processor operates at 1950 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 11 as of: 07/19/2012 22:20:39

on-line since 02/12/2012 02:27:42.

The sparcv9 processor operates at 1950 MHz,

and has a sparcv9 floating point processor.

...

Note that on this chassis a board runs at a uniform clock rate across all sockets and cores, so checking one core per board is sufficient. I omitted the second core in each socket to shorten the above example. Board 1 and Board 3 both run at a 1.95GHz clock rate. 2.1GHz is the fastest board which can be purchased for this chassis.

An example of a completely filled chassis with differing speed CPU boards is as follows:

sun1143$ /usr/sbin/psrinfo -v

Status of virtual processor 0 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:08.

The sparcv9 processor operates at 1200 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 1 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1200 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 2 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1200 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 3 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1200 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 8 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1350 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 9 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1350 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 10 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1350 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 11 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1350 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 16 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 17 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 18 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

Status of virtual processor 19 as of: 07/19/2012 22:32:18

on-line since 07/17/2012 11:27:26.

The sparcv9 processor operates at 1500 MHz,

and has a sparcv9 floating point processor.

...

Note that in the above example, I cut out the second cores to simplify the output. It can be seen that the 3 CPU boards are running at 1.5GHz, 1.35GHz, and 1.2GHz.
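When a chassis mixes board speeds like this, a quick tally of the distinct clock rates shows the mix at a glance. The following is a minimal sketch, assuming the "operates at N MHz," wording shown above; the function name speed_histogram is hypothetical.

```shell
# Hypothetical helper: count processors per clock speed in `psrinfo -v`
# output read from stdin, printing "MHz count" lines, slowest first.
# Assumption: speed lines read "... operates at <N> MHz," as in the example.
speed_histogram() {
    awk '
        /operates at/ {
            # The clock speed is the field just before the "MHz," token.
            for (i = 1; i < NF; i++)
                if ($(i + 1) ~ /^MHz/) count[$i]++
        }
        END { for (s in count) print s, count[s] }
    ' | sort -n
}

# Usage on a live system:
#   /usr/sbin/psrinfo -v | speed_histogram
```

More than one line of output means the boards are not all running at the same speed.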

Network Management

Most network management platforms require extensive uptime and outstanding expandability. One of the reasons for choosing platforms such as these is to have robust platforms which will survive for the duration of a contract with an end customer, which may be 3-5 years.

Many of these mid-range platforms are coming towards the end of their managed services contracts, and there is a lot of horsepower left in them to provide services for the next 3-5 years. Adding a new CPU and memory board can extend the capital investment in the asset for years to come, if you know which platforms can be extended and which platforms to retire.

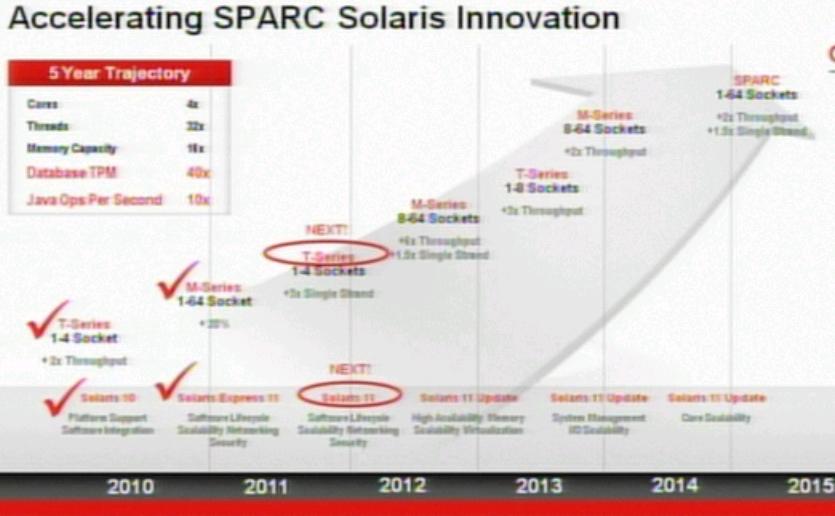

Often, older high-end platforms are rotated to become development platforms, while older low-end platforms are retired. One of the difficulties experienced by network management providers centers on the ISVs, who have had their legs chopped out from under them by Oracle not releasing Solaris 11 for these UltraSPARC units. Some ISVs have simply chosen to stop developing for SPARC Solaris because the barrier to entry is now too high (they must buy new development and new production SPARC hardware, not to mention the lack of inexpensive Solaris SPARC desktops).

Why bother developing network management applications for Solaris SPARC? The next generation of SPARC processors is terrific: crypto engines, 128 slow threads, 64 fast threads, binary compatibility for nearly forever, future 128 fast threads, future 8-socket platforms, built-in gigabit Ethernet in the CPU socket, etc.

It is good to know that SPARC also has a second supplier, which bailed out Sun Microsystems a number of years ago, when Sun was not doing so well with its advanced processor lines. It is comforting to know that viruses and worms seldom target SPARC. It is also good to know that a platform which is booted yearly provides availability unlike most others, and Network Management is all about Availability.