Free 4G Wireless Internet?

Abstract:

Wireless cellular and packet protocols are typically grouped into categories; the higher the category, the faster the performance. The categories are loosely defined by the International Telecommunication Union Radiocommunication Sector (ITU-R) and organized by generation. The first vendor to support free 4G has now appeared on the market.

Wireless History:

New wireless generations have appeared roughly every 10 years since the 1980s, with the latest being 4G.

0G - Mobile Radio Telephone, appearing in 1946

1G - Analog, 22-56 kbit/s, appearing in 1981

2G - Digital, 56-236.8 kbit/s, appearing in 1992

3G - Multi-Media, 200 kbit/s peak rate, appearing in 2001

4G - Packet-based Internet Protocol, 1 Gbit/s peak rate, appearing in 2010-2011

It should be noted that there is a wide gap between 3G and 4G as far as capacity is concerned. There are many intermediate steps, which vendors have branded 3.5G, 3.75G, or even 4G (if the technology has on its "roadmap" the ability to meet 4G specifications, as WiMAX has done).
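To put the size of that gap in concrete terms, the following is a minimal sketch that compares ideal transfer times at the 3G and 4G peak rates listed above. The 5 MB file size and the function name `transfer_seconds` are assumptions chosen for illustration, and the calculation ignores protocol overhead, signal conditions, and network congestion, so real-world times would be longer.

```python
# Peak rates in bits per second, taken from the generation list above.
PEAK_RATE_BPS = {
    "3G": 200_000,          # 200 kbit/s peak rate
    "4G": 1_000_000_000,    # 1 Gbit/s peak rate
}

# Hypothetical file size for illustration: 5 MB (decimal megabytes).
FILE_BYTES = 5_000_000


def transfer_seconds(size_bytes: int, rate_bps: int) -> float:
    """Ideal time to move size_bytes at rate_bps, ignoring all overhead."""
    return size_bytes * 8 / rate_bps


for generation, rate in PEAK_RATE_BPS.items():
    seconds = transfer_seconds(FILE_BYTES, rate)
    print(f"{generation}: {seconds:.2f} s")
```

Under these assumptions the 3G transfer takes 200 seconds and the 4G transfer takes 0.04 seconds, a factor of 5,000, which is the room the intermediate "3.5G" and "3.75G" brandings are filling.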

Internet Access:

The term "Internet" was coined in connection with access to the U.S. Department of Defense's TCP/IP network. Early on, this access was provided through cooperation between different U.S. government organizations, as well as through the public and private university systems within the United States.

The general American public started gaining access to the Internet in the 1990s via dial-up access, providing 300 b/s-56 kb/s. Various corporations managed to raise enough investment to provide this access. In the late 1990s, free dial-up internet services started to become available through corporations like NetZero and FreeServe. As users started to migrate from dial-up to broadband (see later), lawsuits were filed between major players in a shrinking market (like NetZero and Juno), resulting in consolidation and the creation of United Online (NetZero and Juno together formed the second largest internet access provider). Towards the end of popular dial-up access to the Internet, major providers included AOL, United Online, MSN, Earthlink, and AT&T Worldnet.

Performance was enhanced in the 2000s via broadband or high-speed access, commonly via DSL, satellite, and cable. The telco market was regulated, forcing the telcos to allow access by third-party internet service providers (ISPs). To encourage quicker adoption of faster technology, the regulations were loosened, which consolidated internet access among several cable, several telco, and several satellite providers. Free broadband providers were never able to become profitable.

Internet Access and Wireless Convergence:

Internet access became possible via diverse wireless telco networks as the wireless telephone companies became more diverse, wireless data access became more desirable, and the back-haul links to the cell towers became more robust.

Internet access based upon cellular networks started becoming more competitive.

Free Internet Access over Wireless:

The local area network WiFi protocol has become nearly ubiquitous, with locations offering free internet access via WiFi in hotels, coffee shops, book stores, and even automobile service stations.

The drawback to this methodology is that people must remain in a fairly confined area. This restriction has been pretty reasonable for many people, just as "free beer" may only be available at a frat house.

Free internet access provider, Net Zero, helped to pave the way for free internet in the dial-up. United Online is now prepared to offer

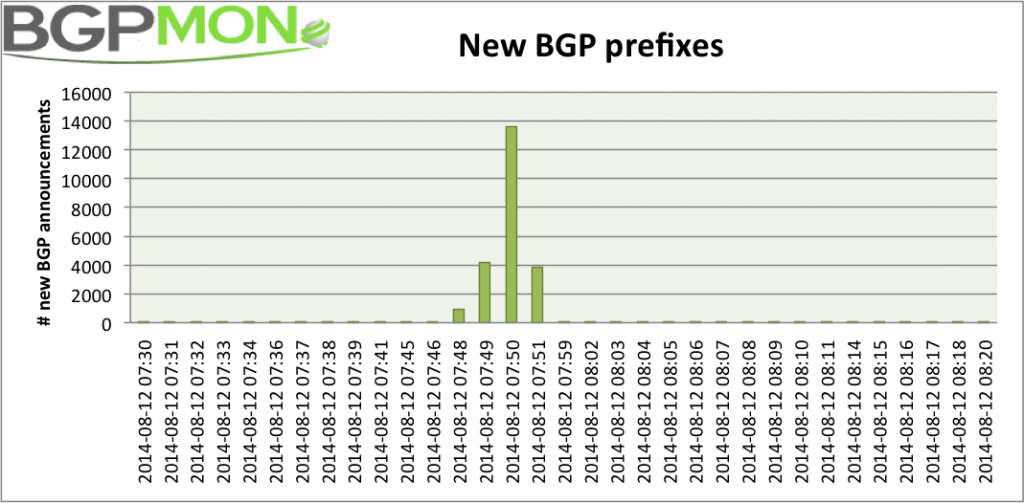

free ineternet access over 4G via it's NetZero subsidiary - with the purchase of equipment and for a period of 1 year (for 200MBytes of data.) After the first year, the $9.95 plan must be purchased, providing for 500MBytes of data. Using WiMAX technology, now being billed as a 4G technology, people can walk or drive around and have access to the internet.

The drawback is clear: with the purchase of the hotspot or USB dongle, the internet is only free for 1 year. No one has a right to complain about how long something is free; the consumer just needs to decide how good a deal it is for them.
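One rough way to judge the deal is to work out the cost per megabyte of the paid plan and how quickly each data allowance would be used up. The sketch below uses only the plan figures quoted above; the 1 Mbit/s sustained rate is a hypothetical value picked for illustration (not a NetZero or WiMAX specification), megabytes are treated as decimal, and the function names are illustrative.

```python
# Plan figures quoted in the article.
FREE_CAP_MB = 200        # data included with the free first-year plan
PAID_CAP_MB = 500        # data included with the paid plan
PAID_PRICE_USD = 9.95    # price of the paid plan

# Hypothetical sustained download rate, for illustration only.
ASSUMED_RATE_MBIT_S = 1.0


def cost_per_mb(price_usd: float, cap_mb: float) -> float:
    """Price of each megabyte if the whole allowance is used."""
    return price_usd / cap_mb


def minutes_to_exhaust(cap_mb: float, rate_mbit_s: float) -> float:
    """Minutes of continuous transfer needed to use up the allowance."""
    seconds = cap_mb * 8 / rate_mbit_s  # megabytes -> megabits -> seconds
    return seconds / 60


print(f"Paid plan: ${cost_per_mb(PAID_PRICE_USD, PAID_CAP_MB):.4f} per MB")
for label, cap in (("Free", FREE_CAP_MB), ("Paid", PAID_CAP_MB)):
    minutes = minutes_to_exhaust(cap, ASSUMED_RATE_MBIT_S)
    print(f"{label} allowance lasts about {minutes:.1f} minutes "
          f"at {ASSUMED_RATE_MBIT_S} Mbit/s")
```

At the assumed rate, either allowance is exhausted in well under two hours of continuous transfer, so these plans suit light, occasional use rather than heavy streaming or downloads.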

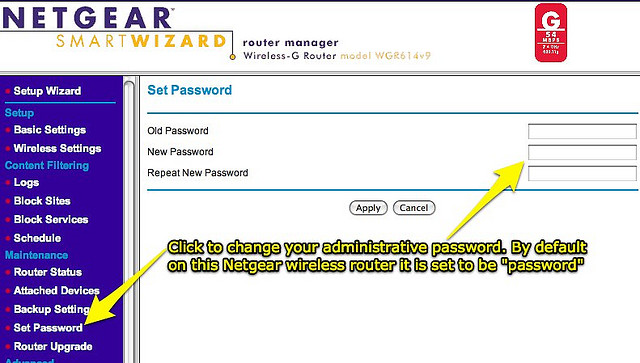

Network Management Connection:

With the rapid expansion of wireless as an access mode and the rapid cost reduction in internet access for wireless devices, inexpensive and massively scalable network management tools will become a requirement.