Abstract:

Solaris 10 was launched in 2005 with ground-breaking features such as DTrace, SMF (Service Management Facility), Zones, and LDoms, with ZFS following later. The latest, and perhaps last, update of Solaris 10 was expected in 2012, to coincide with an early release of the SPARC T5. In 2013, Oracle released yet another update, suggesting the T5 is close to release. This latest installment of Solaris 10 is referred to as the 1/13 release, for January 2013, and appears to be the final SVR4 Solaris release, with normal Oracle support expected to extend to 2018. Many serious administrators will refer to this release as Solaris 10 Update 11.

|

| (Oracle SPARC & Solaris Road Map, 2013-02-11) |

What's New?

Oracle released the "Oracle Solaris 10 1/13 What's New" document, outlining some of the included features. The arrangement of the categories seems odd in some cases, so a few were merged or reordered below. Some of the interesting features include:

|

| (Solaris 10 Update 11 Network File System Install Media Option) |

- Installation Enhancements

iSCSI LUN boot support

NFS (Network File System) media support

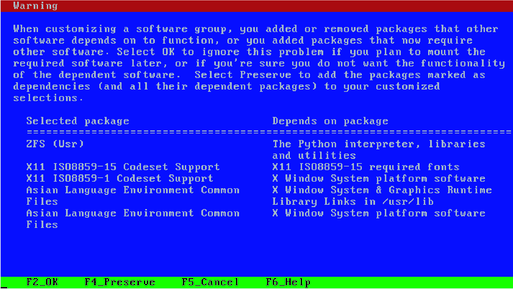

SVR4 Package Dependencies with Installer Preserve Option

LU (Live Upgrade) preserves dump slice

LU Pre-Flight Checker

|

| (Solaris 10 Update 11 SVR4 Package Dependency Install Support) |

- Administration Enhancements

OCM (Oracle Configuration Manager) Client Service

Oracle Zones Pre-Flight Checker

SVR4 pkgdep (Package Depends) Command

Intel x86 FMA (Fault Management Architecture) Sandy Bridge EP Enhancements

AMD MCA (Machine Check Architecture) Support for Family 15h, 0Fh, 10h

# zfs help

The following commands are supported:

allow clone create destroy diff get groupspace help hold holds inherit list mount promote receive release rename rollback send set share snapshot unallow unmount unshare upgrade userspace

(Solaris 10 Update 10 zfs help system enhancements)

- Security Enhancements

64 bit OpenSSL Support

RESTRICTIVE_LOCKING now optional

Speed Tuning/Improvements for: SSH, SCP, SFTP

# zpool help

The following commands are supported:

add attach clear create destroy detach export get

help history import iostat list offline online remove

replace scrub set split status upgrade

(Solaris 10 Update 10 zpool help system enhancements)

- ZFS File System and Storage Enhancements

Help tiered into sub-commands for: zfs, zpool

ZFS aclmode enhancements

ZFS diff enhancements

ZFS snap alias for snapshot

Intel x86 SATA (Serial ATA) support for ATA Pass-Through Commands

AMD x86 XOP and FMA Support

SPARC T4 CRC32c Acceleration for iSCSI

Xen XDF (Virtual Block Device Driver) for x86 Oracle VM

# zfs help create

usage:

create [-p] [-o property=value] ... <filesystem>

create [-ps] [-b blocksize] [-o property=value] ... -V <size> <volume>

(Solaris 10 Update 10 zfs help create system enhancements)
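The tiered help and the new snap alias listed above can be tried safely with a dry-run wrapper. This is only a minimal sketch and not taken from the Oracle document: the dataset name `tank/home`, the snapshot label, and the `run` helper are hypothetical, and the commands are printed rather than executed unless `DRY_RUN=0` is set.

```shell
# Dry-run wrapper (hypothetical helper): print the command unless DRY_RUN=0.
DRY_RUN="${DRY_RUN:-1}"
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

DATASET="tank/home"   # assumed dataset name; substitute your own
LABEL="pre-update"    # assumed snapshot label

run zfs help                                    # top tier: list of subcommands
run zfs help create                             # second tier: usage of one subcommand
run zpool help                                  # the same tiering applies to zpool
run zfs snap "${DATASET}@${LABEL}"              # "snap" is the alias for "snapshot"
run zfs diff "${DATASET}@${LABEL}" "$DATASET"   # changes since that snapshot
```

Running the script with `DRY_RUN=0` on a system that actually has the dataset would execute the same commands for real.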

- Freeware Enhancements

Evince, GNU Make, GNU gettext, GNU IDN (Internationalized Domain Names), Ghostscript, gzip, Jakarta Tomcat, Lightning, rsync, Samba, Sendmail, Thunderbird, Firefox, wxWidgets

- New and Updated Device Drivers

Broadcom BNXE (BCM57712 NetXtreme II 10 Gigabit Ethernet) Driver new support

Intel SR-IOV (Single Root I/O Virtualization) support for i350 Gigabit and x540 10 Gigabit

Sun Blade 6000 Virtualized 40 GbE NEM (Network Express Module)

USB 3.0 Support (Block File Throughput: 150MBps Intel/AMD; 50MBps SPARC)

- Virtualization Enhancements

LDom / Oracle VM SPARC Memory Dynamic Reconfiguration (DR) on Migration
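To put the two USB 3.0 throughput figures in perspective, a quick back-of-the-envelope calculation helps. The sketch below assumes a 32 GB flash stick (32000 MB, a figure chosen purely for illustration and not from the release notes) and sustained transfers at the quoted rates; `transfer_seconds` is a hypothetical helper.

```shell
# Seconds needed to move SIZE_MB megabytes at RATE_MBPS megabytes per second.
# Integer division, so the result is rounded down.
transfer_seconds() {
    echo $(( $1 / $2 ))
}

CACHE_MB=32000   # assumed 32 GB flash stick, not a figure from the release notes

echo "Intel/AMD (150MBps): $(transfer_seconds "$CACHE_MB" 150) s"
echo "SPARC     (50MBps):  $(transfer_seconds "$CACHE_MB" 50) s"
```

Under these assumptions, filling the device takes roughly three and a half minutes on Intel/AMD and more than ten minutes on SPARC, a threefold difference.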

Competitive Pressures:

Competition makes the Operating System market healthy! Let's look at the competitive landscape.

|

| (Illumos Logo) |

With USB 3.0, Solaris is now in a better support position than Illumos, which is still missing USB 3.0 today. Since Solaris 10, Solaris 11, and Illumos all have top-of-the-line read and write flash accelerators for hard disk storage, a USB 3.0 flash cache would provide a nice, inexpensive performance boost! The slower USB 3.0 support on SPARC, arriving in 2013q1, is likely to be shunned by SMBs weighing Solaris ZFS against Apple Mac OS X. Apple released USB 3.0 support in 2012q4 alongside Fusion Drive, making OS X a strong contender. Apple may have been late to flash after proper licensing could not be agreed between Sun/Oracle and Apple, and Apple is still late with deduplication, but now Oracle and Illumos are late in combining USB 3.0 with ZFS.
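To make the flash cache idea concrete: ZFS accepts a read cache device through `zpool add <pool> cache <device>`. The following is only a sketch, assuming a pool named `tank` and a USB flash device that appears as `c5t0d0`. Both names and the `run` helper are hypothetical, and the commands are printed rather than executed unless `DRY_RUN=0` is set.

```shell
# Dry-run wrapper (hypothetical helper): print the command unless DRY_RUN=0.
DRY_RUN="${DRY_RUN:-1}"
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

POOL="tank"        # assumed pool name
USB_DEV="c5t0d0"   # assumed device name of the USB 3.0 flash stick

run zpool add "$POOL" cache "$USB_DEV"   # attach the stick as a read cache device
run zpool iostat -v "$POOL" 5            # per-device activity every 5 seconds, cache included
run zpool remove "$POOL" "$USB_DEV"      # cache devices can be removed again later
```

Because a cache device only holds copies of data already in the pool, losing or unplugging it should not endanger the pool, which is what makes an inexpensive USB stick a plausible candidate.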

|

| (Lustre logo, courtesy hpcwire) |

Sun purchased Lustre back in 2007 for ZFS integration. NetMgmt salivated when Lustre for ZFS was on tap back in 2009, since ZFS had needed clustering/replication for a long time. Red Hat purchased GlusterFS in 2011 and went beta in 2012 with production-quality file system clustering. IBM released ZFS and Lustre on their own hardware and Linux OS. NetMgmt noted that Lustre on EMC was arriving in 2012, questioned Oracle's sluggishness, and begged for an Illumos rescue. Even Microsoft "got it" when Windows Server 2012 bundled dedupe, clustering, iSCSI, SMB, and NFS. It seems Apple, Oracle, and Illumos are the last major vendors to be late with native file system clustering... although Apple is not pretending to play on the server field.

|

| (Superspeed USB 3.0 logo, courtesy usb3-thunderbolt.com) |

The lack of file system clustering in the final update of Solaris 10 is miserable, especially after various Lustre patches made it into ZFS years ago. Perhaps Oracle is waiting for a Solaris 11 update to deliver clustering? The lack of focus by Illumos on clustering and USB 3.0 makes me wonder whether their core supporters (embedded storage and cloud providers) really understand how big a hole they have. An embedded storage provider should want USB 3.0 for external disks, and clustering for geographically dispersed storage, on its check-list. A cloud provider should want geographically dispersed clustering, at the least.

|

| (KVM is bundled into Joyent SmartOS, as well as Linux) |

Conclusions:

Oracle Solaris 10 is alive and well - GO GET Update 11!!! Some of the most important features include the CPU architecture enhancements (is the SPARC T5 silently supported, since the T5 has been in test since the end of 2012?), USB 3.0, iSCSI support for root disk installations, SVR4 package dependency support in the installer, and NFS media support. Many of these features will be welcomed by SMBs (small to medium-sized businesses).

|

| (Bullet Train, courtesy gojapango) |