| [Amazon Web Services Logo, Courtesy Amazon] |

Distributed Denial of Service, Amazon Cloud & Consequences

Abstract

The US military has been involved in advancing the art of computing infrastructure since the early days of computing. With many clouds already built inside the Pentagon, an effort was initiated to standardize on a single external cloud vendor. Unlike many contracts, where vendors compete with one another for a piece of the pie, this was a "live and let die" contract for the whole proverbial pie, not just a slice. Many vendors & government proponents did not like this approach, but the proverbial "favoured son", who had a CIA contract, approved. This is that son's story.

Problems of Very Few Large Customers

Very few large customers create distortions in the market.

- Many understand that consolidating smaller contracts into a very few large contracts is unhealthy. A few very large single consumers, like the military, create an environment where suppliers that cannot win some business will exit the market, since the number of buyers is too small. That limits the pool of possible suppliers in time of war.

- Some complain that personal disputes can get in the way of objective decision making, in large business transactions.

- Others warn that political partisanship can wreck otherwise potentially terrific technology decisions.

- Many complain that only a few large contracts offer opportunity for corruption at many levels, because the stakes are so high for the huge entities trying to gain that business.

- In older days, mistakes by smaller suppliers gave opportunity for correction before the next bid... but when very few bids are offered, the opportunities are fleeting, and a supplier needs substantially deep pockets to survive a bid loss.

- Fewer customer opportunities discourage innovation: being innovative risks losing an opportunity when the few RFP issuers are rigidly bound by requests for older technology and are discouraged from pursuing higher-cost newer technology.

| [Amazon Gift Card, Courtesy Amazon] |

Amazon's Business to Lose

From the very beginning, Amazon's Jeff Bezos had his way in. Former Defense Secretary James Mattis hired Washington DC lobbyist Sally Donnelly, who formerly worked for Amazon, and the Pentagon was soon committed to moving all of its data to the private cloud. The irony is that in 2017 Bezos, who has a bitter disagreement with President Trump, now had a proverbial "ring in the nose" of President Trump's "second in command" of the Armed Forces.

Anthony DeMartino, a former deputy chief of staff in the secretary of defense’s office who previously consulted for Amazon Web Services, was also extended a job at Amazon after working through the RFP process.

The features requested in the RFP looked suspiciously as though they were tailor-written for Amazon, asking for capabilities that only Amazon could offer. Competitors like Oracle had changed their whole business model, redirecting all corporate revenue into Cloud Computing, just to meet the $2 Billion revenue requirement to be allowed to bid on the RFP! How did such requirements appear?

Amazon's Deap Ubhi left the AWS Cloud Division to work at the Pentagon, helped create the JEDI procurement contract, and later returned to Amazon. Ubhi, a venture capitalist, worked as one of a four-person team shaping the JEDI procurement process while in secret negotiations with Amazon to be re-hired for a future job. The Intercept further reminded us:

Under the Procurement Integrity Act, government officials who are “contacted by a [contract] bidder about non-federal employment” have two options: They must either report the contact and reject the offer of employment or promptly recuse themselves from any contract proceedings.

The Intercept also noted that Ubhi accepted a verbal offer from Amazon for the purchase of one of the companies he owned, during the time he was working on the Market Research that would eventually form the RFP.

A third DoD individual involved in tailoring the RFP was also offered a job at Amazon, according to Oracle court filings, but this person's name was redacted from the record.

At the highest & lowest levels, the JEDI contract appeared to be "Gift-Wrapped" for Amazon.

| [Amazon CEO Jeff Bezos hosting Trump's Former Defense Secretary James Mattis at HQ, courtesy Twitter] |

Amazon Navigating Troubled Waters

December 23, 2018, President Trump pushes out Secretary of Defense James Mattis after Mattis offered a resignation letter, effective February 2019.

January 24, 2019, Pentagon investigates Oracle's concerns about unfair practices in the hiring of a Cloud Procurement Contract worker from Amazon.

April 11, 2019, Microsoft & Amazon become finalists in the JEDI cloud bidding, knocking out other competitors like Oracle & IBM.

June 28, 2019, Oracle Corporation files lawsuit against the Federal Government for creating RFP rules which violate various Federal Laws, passed by Congress, to restrict corruption. Oracle also argued that three individuals who tilted the process towards Amazon were effectively "paid off" by receiving jobs at Amazon.

July 12, 2019, Judge rules against Oracle in lawsuit over bid improprieties, leaving Microsoft & Amazon as finalists.

August 9, 2019, Newly appointed Secretary of Defense Mark Esper is to complete "a series of thorough reviews of the technology" before the JEDI procurement is executed.

On August 29, 2019, the Pentagon awarded its DEOS (Defense Enterprise Office Solutions) cloud contract, a 10-year, $7.6 billion deal, to Microsoft, based upon its 365 platform.

On October 22, 2019, Secretary of Defense Mark Esper withdrew from reviewing bids on the JEDI contract, due to his son being employed by one of the previous losing bidders.

Serendipity vs Spiral Death Syndrome

Serendipity is the occurrence and development of events by chance with beneficial results. The opposite may be Spiral Death Syndrome, when an odd event creates a situation where catastrophic failure becomes unavoidable.

What happens when an issue, possibly out of the control of a bidder, becomes news during a vendor choice?

This may have occurred with Amazon AWS in its recent bid for a government contract. Amazon pushed to have the Pentagon clouds outsourced, working at one level below the President, and even had the rules of an RFP written to favor it in a massive 10-year, $10 Billion single-contract agreement.

October 22, 2019, a Distributed Denial of Service (DDoS) attack hit Amazon Web Services, taking down users of Amazon AWS for hours. Oddly enough, it was a DNS attack, centered upon Amazon S3 storage objects. External vendors measured the outages to last 13 hours.

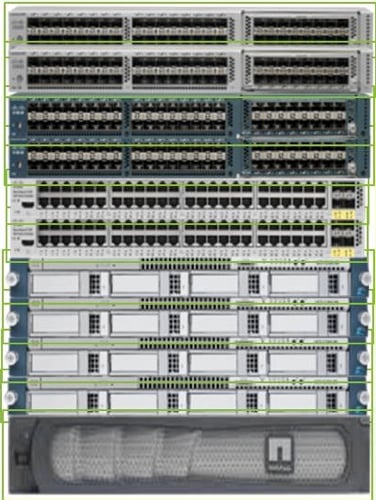

On October 25, 2019, the Pentagon awarded its JEDI (Joint Enterprise Defense Infrastructure) cloud contract, a 10-year, $10 billion deal, to Microsoft. The Pentagon had over 500 separate clouds to be unified under Microsoft, and it looks like Microsoft will do the work with the help of smaller partners.

Conclusions:

Whether the final choice of the JEDI provider was Serendipitous for Microsoft, or the result of Spiral Death Syndrome for Amazon, is for the reader to decide. For this writer, the final stages of choosing a bidder, where the favoured bidder looks like it could have been manipulating the system at the highest & lowest levels of government, even having the final newly installed firewall [Mark Esper] torn down 3 days earlier, make for an amazing journey. A 13 hour cloud outage seems to have been the final proverbial "nail in the coffin" for a skilled new bidder who was poised to become the ONLY cloud service provider to the U.S. Department of Defense.

(Full Disclosure: a single cloud outage for Pentagon Data lasting 13 hours, just before a pre-emptive nuclear attack on the United States & European Allies [under our nuclear umbrella], could not only have been disastrous, but could have wiped out Western Civilization. Compartmentalization of data is critical for data security and the concept of a single cloud seems ill-baked, in the opinion of this writer.)