Image courtesy: WWPI.

Abstract:

Automatic Storage Tiering, or Hierarchical Storage Management, is the process of placing data onto the most cost-effective storage that still meets basic accessibility and efficiency requirements. There has been much movement in storage management over the past half-decade.
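To make the placement rule concrete, here is a minimal Python sketch that picks the cheapest tier still meeting a latency requirement. The tier names loosely follow the hierarchy described below, but the cost and latency figures are hypothetical placeholders, and real HSM products weigh many more factors (access frequency, capacity, policy) than a single latency threshold.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    cost_per_gb: float   # relative cost; hypothetical illustrative numbers
    latency_ms: float    # typical access latency; hypothetical illustrative numbers


# Hypothetical tiers, ordered from most to least expensive.
TIERS = [
    Tier("ram", 5.00, 0.0001),
    Tier("ssd", 0.50, 0.1),
    Tier("15k-disk", 0.20, 4.0),
    Tier("sata-disk", 0.05, 10.0),
    Tier("tape", 0.01, 60_000.0),
]


def place(max_latency_ms: float, tiers=TIERS) -> Tier:
    """Return the cheapest tier whose latency meets the requirement."""
    candidates = [t for t in tiers if t.latency_ms <= max_latency_ms]
    if not candidates:
        raise ValueError("no tier satisfies the latency requirement")
    return min(candidates, key=lambda t: t.cost_per_gb)
```

With these illustrative numbers, `place(5.0)` selects the `15k-disk` tier, while a relaxed requirement such as `place(20.0)` drops to `sata-disk`.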

Early History:

When computers were first being built on boards, RAM (the most expensive) held the most volatile data, while ROM held the least frequently changing data. EPROMs gave users a way to occasionally change mostly static data, placing the technology in a middle tier, although erasing one required slow exposure to ultraviolet light and reprogramming required a special high-voltage burner. EEPROMs provided a simpler way to update data on the same machine, without removing the chip for burning.

Tape was created to archive data for longer periods of time, but it was slow, so it went to the bottom of the capacity distribution pyramid. Rotating disks (sometimes called "rotating rust") took a middle storage tier. As disks became faster, the fastest disks (15,000 RPM) moved toward the upper part of the middle tier, while slower disks (5,400 RPM or slower) moved toward the bottom part. Eventually, consumer-grade (less reliable) IDE and SATA disks became available, occupying the upper end of the lowest tier, slightly above tape.

Things seemed to settle out for a while in the storage hierarchy (RAM, ROM, EEPROM, 15K Fibre Channel/SCSI/SAS disks, 7.2K Fibre Channel/SCSI/SAS disks, IDE/SATA, tape) until Sun Microsystems created ZFS.

Sun Microsystems logo

In the early 2000s, Sun Microsystems started to invest more in flash technology. Sun anticipated a revolution in storage management driven by the increasing performance of flash memory, which is essentially a form of EEPROM. Flash-based drives became known as Solid State Drives, or SSDs. In 2005, Sun released ZFS for its Solaris 10 operating system and began adding features that included flash acceleration.

Sun noticed a general problem: flash was either cheap with lower reliability (Multi-Level Cell, or MLC) or expensive with higher reliability (Single-Level Cell, or SLC). Flash was also less reliable than disk in write-heavy environments. The basic idea was to turn automatic storage management on its head by introducing flash into ZFS under Solaris: RAM via the ARC (Adaptive Replacement Cache), cheap flash via the L2ARC (Level 2 ARC), expensive flash via a separate log device for the ZFS Intent Log (ZIL), and cheap disks protected by parity and block-level checksums.
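The read side of this layering can be sketched as a toy model: look in RAM first, then in the flash cache, and only then go to disk, spilling blocks evicted from RAM into flash. This is a deliberate simplification that assumes plain LRU eviction; the real ARC balances recently used against frequently used blocks, the real L2ARC is filled by a background feed rather than on every eviction, and the intent log used for synchronous writes is not modeled at all. The class names are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Minimal LRU cache: a simplified stand-in for ZFS's adaptive ARC policy."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.entries = OrderedDict()

    def get(self, key):
        if key not in self.entries:
            return None
        self.entries.move_to_end(key)  # mark as most recently used
        return self.entries[key]

    def put(self, key, value):
        """Insert a block; return the evicted (key, value) pair, or None."""
        self.entries[key] = value
        self.entries.move_to_end(key)
        if len(self.entries) > self.capacity:
            return self.entries.popitem(last=False)  # least recently used
        return None


class ToyTieredReader:
    """Hypothetical read path: RAM cache, then flash cache, then disk."""

    def __init__(self, disk_blocks: dict, ram_blocks: int, flash_blocks: int):
        self.ram = LRUCache(ram_blocks)      # plays the role of the ARC
        self.flash = LRUCache(flash_blocks)  # plays the role of the L2ARC
        self.disk = disk_blocks              # the large, cheap disk pool

    def read(self, block):
        """Return (value, tier that served the read)."""
        value = self.ram.get(block)
        if value is not None:
            return value, "ram"
        value, tier = self.flash.get(block), "flash"
        if value is None:
            value, tier = self.disk[block], "disk"
        evicted = self.ram.put(block, value)  # promote into RAM
        if evicted is not None:
            self.flash.put(*evicted)          # RAM evictions spill to flash
        return value, tier
```

With room for only two blocks in RAM, a block read once, pushed out by two newer reads, and then read again is served from the flash tier instead of going back to disk.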

Sun Fire X4500 aka Thumper, courtesy Sun Microsystems

For the next 5-7 years, the industry wrestled with how to respond to the file system innovation Sun Microsystems introduced with Solaris ZFS. More importantly, ZFS is a 128-bit file system, so it can address storage on a scale that was previously unimaginable. The operating system and file system were also open-sourced, making it a 100% open solution.

Because this technology was built into all of Sun's servers, it became increasingly important as Sun released mid-range storage systems based on it that combined high capacity, lower price, and faster access. Low-end solutions based on ZFS also started to hit the market, as did super-high-end solutions in MPP clusters.

Drobo-5D, courtesy Drobo

Recent Vendor Advancements - Drobo:

A small-business-centric company called Data Robotics, or Drobo, released a small RAID system with an innovative feature: the user can add drives of different sizes, and the system adds capacity and redundancy on the fly, at the cost of some disk space. Despite that lost space, the user is protected against the failure of any drive in the array, regardless of its size, and a drive of any size can replace the failed unit.
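Drobo's actual data layout is proprietary, so the sketch below only uses a common rule of thumb for mixed-size arrays with single-drive redundancy: because the array must survive the loss of any one drive, including the largest, usable space can be at most the total raw capacity minus the largest drive. Treat it as an estimate, not Drobo's published formula; the function name is hypothetical.

```python
def usable_capacity_tb(drive_sizes_tb):
    """Estimate usable space for a mixed-size array tolerating one drive failure.

    Every block must survive the loss of any single drive, so stored data
    cannot exceed what remains after removing the largest drive.
    """
    if len(drive_sizes_tb) < 2:
        raise ValueError("single-drive redundancy needs at least two drives")
    return sum(drive_sizes_tb) - max(drive_sizes_tb)
```

With drives of 1, 2 and 3 TB the estimate is 3 TB. With only a 1 TB and a 4 TB drive it is 1 TB, because 3 TB of the larger drive has nothing to pair with until another drive is added; that stranded space is the "loss of some disk space" mentioned above.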

Drobo-Mini, courtesy Drobo

The challenge with Drobo is that it is a proprietary solution, built on fairly expensive hardware, for the low-to-medium-end market. This is not your $150 RAID box, where you can stick in a couple of drives and go.

Recent Vendor Advancements - Apple:

Not to be left out, Apple announced plans to bundle ZFS into every Macintosh it shipped. Soon enough, Apple canceled its ZFS announcement, canceled its open-source ZFS announcement, placed job ads for people to create a new file system, and went into hibernation for years.

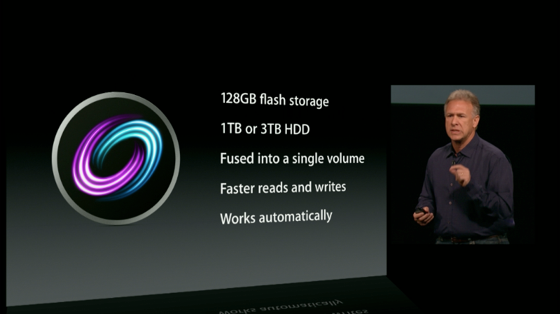

Apple recently released a Mac OS X feature it calls Fusion Drive. Mac Observer noted:

"all writes take place on the SSD drive, and are later moved to the mechanical drive if needed, resulting in faster initial writes. The Fusion will be available for the new iMac and new Mac mini models announced today"

Once again, the market was hit with an innovative product bundled into an operating system, one that did not require proprietary software.
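The behavior in the quote (writes land on the SSD and are later moved to the mechanical drive) can be illustrated with a small hypothetical model. Apple's real placement policy is not described in the quote; this sketch simply assumes that a background step demotes the least recently accessed blocks once the SSD exceeds its capacity, and it omits promoting hot data back from the hard drive.

```python
class ToyFusionVolume:
    """Hypothetical two-tier volume: writes land on SSD, cold data moves to HDD."""

    def __init__(self, ssd_capacity_blocks: int):
        self.ssd_capacity = ssd_capacity_blocks
        self.ssd = {}          # block -> data on the fast tier
        self.hdd = {}          # block -> data on the slow tier
        self.last_access = {}  # block -> logical timestamp of last access
        self.clock = 0

    def _touch(self, block):
        self.clock += 1
        self.last_access[block] = self.clock

    def write(self, block, data):
        # Every write goes to the fast tier first, as the quote describes.
        self.hdd.pop(block, None)
        self.ssd[block] = data
        self._touch(block)

    def read(self, block):
        data = self.ssd[block] if block in self.ssd else self.hdd[block]
        self._touch(block)
        return data

    def migrate(self):
        """Background step: demote least recently used blocks until the SSD fits."""
        overflow = len(self.ssd) - self.ssd_capacity
        if overflow <= 0:
            return []
        coldest = sorted(self.ssd, key=lambda b: self.last_access[b])[:overflow]
        for block in coldest:
            self.hdd[block] = self.ssd.pop(block)
        return coldest
```

Writes are always fast because they never wait for the hard drive; the cost is that the SSD temporarily holds more than its share until `migrate()` runs.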

Implications for Network Management:

Continual improvement in storage technology will place an interesting burden on network, systems, and storage management vendors. How does one manage all of these solutions in an open way, using open protocols such as SNMP?
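As a small example of what open-protocol monitoring can already report, SNMP agents that implement the standard HOST-RESOURCES-MIB (RFC 2790) expose an `hrStorageTable` with a description, an allocation unit size in bytes, a total size, and a used size for each storage area. The sketch below assumes those column values have already been retrieved (for example with Net-SNMP's `snmpwalk` or an SNMP library, not shown) and converts them into bytes and percent used; the `HrStorageRow` class, `summarize` function, and sample rows are hypothetical. The table describes capacity per storage area but says nothing about which tier data lives on, which is the kind of gap raised above.

```python
from dataclasses import dataclass


@dataclass
class HrStorageRow:
    """One row of HOST-RESOURCES-MIB::hrStorageTable (RFC 2790)."""
    descr: str             # hrStorageDescr
    allocation_units: int  # hrStorageAllocationUnits, bytes per unit
    size: int              # hrStorageSize, in allocation units
    used: int              # hrStorageUsed, in allocation units


def summarize(rows):
    """Convert raw hrStorageTable rows into (used bytes, total bytes, percent used)."""
    report = {}
    for row in rows:
        total = row.size * row.allocation_units
        used = row.used * row.allocation_units
        pct = 100.0 * used / total if total else 0.0
        report[row.descr] = (used, total, round(pct, 1))
    return report
```

For a hypothetical row `HrStorageRow("/data", 4096, 1000, 250)`, the summary reports 1,024,000 of 4,096,000 bytes used, or 25.0%.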

The vendors that crack the management nut may be in the best position to have their products accepted into existing heterogeneous, managed storage shops, or possibly to supplant the tools already there.
