System Vendor - CISC, RISC, EPIC Update

Abstract:

Since the decline of the Motorola 68000 CISC processor, RISC processors had been on the rise, to eventually be re-challenged by Intel with the release of the 80386 (and future models) with a Motorola-like flat memory model. UNIX vendors had standardized on the 68000, migrating to the RISC processors, and occasionally moving back to Intel. There have been predictions of the decline of RISC, the loss of major processor families like ALPHA and MIPS, a decline of POWER, and rumors of the end of Intel's EPIC processor family, Itanium, but some level of diversity surprisingly continues.

IBM Update: Power 7+

In 1982, IBM released a 68000 based workstation, based upon a 32/16 bit processor. There was a decision to move to x86 on the PC form factor, leveraging an existing relationship with Intel for the 8088, reducing cost by using a 16/8 bit processor, and gaining ready 8 bit part availability. This started the business PC market. IBM started to design their own RISC chip, called POWER, for their own UNIX workstations. The POWER multichip CPU modules were physically huge and very costly to manufacture - gluing together multiple chips onto a single carrier socket, limiting production quantities.

The Apple-IBM-Motorola consortium started manufacturing PowerPC processors, bringing the POWER RISC architecture onto Apple desktops through a simpler manufacturing process, but Apple discontinued its use, not long after Apple purchased NeXT (this is the point where IBM POWER lost the desktop market.) In January 2008, IBM started using QuickTransit to provide x86 Linux software on their proprietary POWER processor, later ending in IBM purchasing Transitive. IBM almost purchased Sun, which would have allowed IBM to acquire SPARC, the industry volume leading commodity [non-multichip module] RISC, and Solaris, the industry leading UNIX OS.

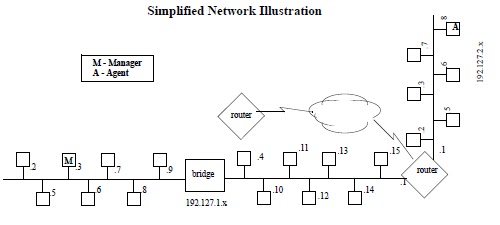

[POWER5 Multi-Chip Module]

It was noted in Network Management at the end of August 2011 that POWER 7+ was late.

March 2012, Sony appears to have abandoned IBM POWER - this is when IBM POWER lost the gaming market.

April 2012, IBM POWER 7+ was a half-year late.

May 2012, IBM POWER 7+ was 7 months late. June 2012 - POWER 7+ is now 8 months late. Multi-chip modules are much simpler to bring to market than chips designed into a single piece of silicon. For IBM to be so late, something bad must have happened. This does not bode well for AIX users.

HP Update: Itanium

In 2007, HP licensed Transitive's QuickTransit, to provide Solaris software for HP's Intel based Itanium servers.

Transitive made HP a global distributor in 2008, right before IBM bought Transitive, killing HP's path to move SPARC software onto x86 Linux or Itanium HP-UX. Itanium was the first, and possibly last, nearly mainstream Explicitly Parallel Instruction Computing (or EPIC) CPU architecture.

February 2009, HP describes Project Blackbird - HP acknowledges Solaris as the leading UNIX in the United States, Itanium is on a "death march", and HP considers purchasing Sun/Solaris.

December 2009, RedHat kills Linux on Itanium.

April 2010, Microsoft kills Windows on Itanium.

December 2010, HP-UX was booting under Intel x86 - Project "Redwood" suggested a "last" Itanium chip in 2014, while recommending funding to move HP-UX to Intel x86.

In March 2011, Oracle stops new software development on Itanium.

In November 2011, The Register described HP's Project Odyssey - building high-end Intel x86 systems, mapping Itanium HP-UX features to Intel x86, giving away Itanium/HP-UX software technology to Linux (not available under Itanium), and enhancing Windows with Microsoft.

On May 30, 2012, HP revived an old slide dating back to June 25, 2010 from Project Kinetic, where HP-UX and other HP [OpenVMS and NonStop] operating systems will remain under Itanium, but with a twist: socket-level compatibility between Itanium and x86; a new UNIX will run under both Itanium and x86; driving mid-range features into Intel, Linux, and Windows.

The HP-UX, OpenVMS, and NonStop operating systems look dead because of their dependency on the doomed Itanium, whose architecture seems to be on a trajectory to move to x86, while the OS's will have their features given to other operating systems. The movement to Solaris might be too late, unless HP decides to fix its technology gap by partnering with an OpenSolaris distribution, like SGI did (see next section.) HP really needs something like Solaris Branded Zones, to encapsulate all 3 OS's.

SGI Update: OpenSolaris???

This is a most unusual update. In 1982, SGI was founded, selling UNIX IRIS Workstations using Motorola 68000 processors. Their OS eventually became AT&T System V - branded as IRIX. In 1986, the MIPS R2000 processor was released and incorporated into SGI workstations. In 1991, SGI went 64 bit with the MIPS R4000 processor. SGI abandoned MIPS and moved to Intel Itanium, with their first Itanium workstation in 2001. In 2006, SGI abandoned Itanium for Intel x86 and stopped developing IRIX.

Rackable purchased SGI in 2009, renaming the entire company back to SGI. One version of the fall of SGI was recorded here.

Why go through all this effort, to remember Super Computer and Graphics Workstation creator SGI? It seems SGI has started to investigate UNIX again.

SGI is using Nexenta for their SAN solution. Nexenta is based upon Illumos, formerly based upon OpenSolaris, which is the basis for Oracle's UNIX - Solaris 11. SGI embraces Solaris x86, for a portion of their solution, as HP considered in Project Redwood.

Dell Update: ARM???

The only thing stranger than fiction is reality. Dell would normally never appear in an article like this, but as other vendors are exiting the non-Intel x86 CISC marketplaces, Dell is about the only systems vendor who seems to be expanding out of the Intel x86 CISC market!

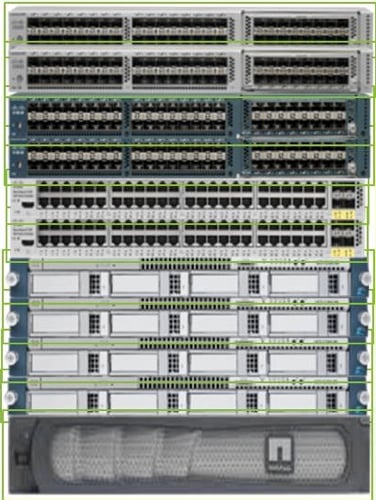

[Dell Quad ARM Server per Blade and Chassis]

Now, May 29, 2012 - Dell announces a RISC machine, based upon the ARM processor!

Project Copper was bundled under Dell's Enterprise web site tree, which is an indication of where they are interested in pushing this new product. Will Dell learn from mistakes by IBM and HP, or corrections by SGI - by bundling a Market Leading UNIX... in the form of an Oracle Solaris variant based upon Illumos?

Does an enterprise or managed service grade OS exist for ARM?

In June 2009, a release of OpenSolaris for ARM hit the wild. An example of OpenSolaris booting on ARM was blogged.

In October 2009, the web page was created for the release of OpenSolaris for ARM - bringing the leading UNIX to the ARM processor family. Doug Scott mentioned he was reviving a port of OpenSolaris to ARM in October 2011 for ZFS on an ARM based SheevaPlug.

In October 2011, ARM announces the V8 processor release, migrating ARM from a 32 bit to a 64 bit architecture - which is where the OpenSolaris variants have all moved. Dell has an excellent opportunity.

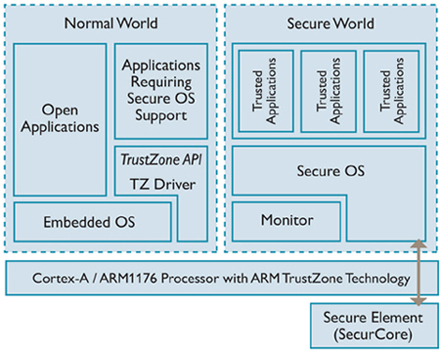

Apple Update: Intel and ARM

This is, perhaps, one of the most interesting computer companies in history. Starting with 8 bit 6502 processors, they moved to the Motorola 68000 CISC for their high-end publishing workstation, which they called the Macintosh. After Apple kicked out its CEO & founder, Steve Jobs, he started NeXT computer, based on Motorola 68000 processors and a UNIX core.

[Apple iPhone 4s based upon ARM processor and MacOSX UNIX derivative iOS]

NeXT migrated their UNIX OS to Intel and went from being a workstation vendor to an OS vendor. Apple desperately needed a modern OS and almost went out of business. Apple purchased NeXT (getting the former CEO Steve Jobs back.) The combined company produced a UNIX based desktop with an OS called MacOS X (Macintosh Operating System 10 - based upon a NeXT Step UNIX OS core) placed on top of a PowerPC chip (designed by the Apple, IBM, Motorola consortium - called the AIM alliance.) Apple almost merged with Sun several times, collaborating on OpenSTEP (an open-sourced NeXT OS) during various aspects of this history. Soon, Apple created the iMac and the company started to turn around.

[Apple iPad2 based upon ARM processor and MacOSX UNIX derivative iOS]

Most recently, Apple went through another migration - moving MacOS X back to its NeXT Intel code base. Apple started to regain profitability and then invested in a new set of consumer products. First was the iPod, then the iPhone, then the iPad. Many of these new devices were based upon the ARM RISC processor and upon MacOSX, but the OS was branded iOS. At this point, Apple exploded, becoming the number one client vendor on the market, growing to such an extent that they could buy Intel with the spare cash they had on hand. Apple did the nearly impossible: created a new RISC based UNIX ecosystem based upon nothing.

Oracle/Sun: SPARC & Solaris Update

Early on, SUN built their platforms on the Motorola 68000 family, as did most workstation vendors. They experimented with x86 for a short while, then discontinued it. Solaris 9 was released on Intel, where Intel based UNIX vendors like NCR started migrating to Solaris from their SVR4 platforms like MP-RAS. Solaris 10 was released only on SPARC, Solaris was open-sourced as OpenSolaris (for both Intel and SPARC), and Solaris 11 was released on Intel and SPARC after Oracle purchased Sun. Interestingly, Solaris was being ported to PowerPC for a short period of time, with designers working on an OpenSTEP interface, during a time when Apple was not doing so well. Various Solaris variants, based upon the OpenSolaris project, have hit the marketplace, with more distributions being released regularly.

[SunRay 270 Ultra-Thin Client]

From early in its history, Sun had traditionally been a 32 bit UNIX workstation vendor. It migrated to 64 bit UNIX workstations, moved from desktop UNIX workstations to UNIX servers, created the ultra-thin SunRay client based upon the 32 bit MicroSPARC to replace UNIX desktop workstations, and surprisingly migrated the SunRay platform from MicroSPARC to ARM. Various releases of OpenSolaris had briefly touched ARM, but Solaris had primarily remained focused on SPARC and Intel, with the SunRays being firmware based systems.

[SPARC T5 feature slide, courtesy Oracle on-line presentation]

As variants of RISC and the one EPIC processor have been found to be losing mind share, there have been two major exceptions: SPARC and ARM. Oracle continues to make thin-clients based upon ARM, with no roadmap. Oracle committed to a 5 year plan on SPARC, which has been executed either on-time or early for multiple processors. The SPARC T4 brought a fast single-threaded platform with eight cores in 2011. A few months away, the SPARC T5 processor will bring 16 cores (again) to the SPARC family from Oracle, with features including compression and Oracle number processing in hardware.

Fujitsu: SPARC64 Update

Fujitsu is another interesting company, in this article. They did not organically grow into the UNIX movement from Motorola 68000 processors, like most other industry players - Fujitsu co-developed with Sun into the RISC UNIX market.

[Fujitsu SPARC64 VII, used in both Fujitsu and Sun branded mainframe class systems]

SPARC was developed by Sun Microsystems in 1986. Fujitsu fabricated the SPARC 86900 developed by Sun Microsystems, the first SPARC V7 architecture. SPARC International was founded in 1989, standardizing the 32 bit SPARC V8 multi-vendor architecture, creating the first non-proprietary RISC mainstream platforms.

Andrew Heller, head of the RS6000 POWER based UNIX workstation group, left IBM and founded a new company in 1990, HAL Computer Systems, to develop a SPARC processor. In 1991, Fujitsu donated significant funding for a 44% stake, in return for the use of SPARC chips in their own systems. In 1992, the SPARClite was produced by Fujitsu. In 1993, Fujitsu purchased the rest of HAL, making Fujitsu the sole driver behind SPARC systems. The 64 bit SPARC V9 architecture was published in 1994 and Fujitsu shipped their first system in 1995. Fujitsu actually beat Sun to market with the first 64 bit SPARC processor.

[Fujitsu SPARC64 IXfx 16 core CPU floor plan - heart of fastest super computer cluster in the world in 2011-2012]

While other CPU architectures were proprietary, with various corporations suing one another (i.e. Intel suing AMD) - SPARC brought a level of openness to the industry where vendors could cooperate (and occasionally bail each other out, spreading the risk, while sharing the rewards from the UNIX market.) During a time when Sun's SPARC development pipeline ran dry, Fujitsu provided SPARC64 CPU's for Sun & Fujitsu high-end platforms. Sun purchased a third-party SPARC development house, Afara Websystems, produced the T line of SPARC processors, and jointly sold the SPARC T line with Fujitsu. Solaris is standard on all of these platforms.

[Fujitsu SPARC64 IXfx, 16 core CPU, heart of Fujitsu's PRIMEHPC FX10 - the fastest supercomputer world-wide in 2011-2012]

Fujitsu continues to push ahead with SPARC on their own platforms, holding the fastest computer in the world for over a year. What makes this a special SPARC is that Solaris is not at its core - rather Linux is. It seems rather amazing that Linux departed from Intel Itanium, in order to become the OS of choice for the fastest computer in the world, on a Fujitsu SPARC platform.

In Conclusion

IBM POWER is barely breathing, with their latest road mapped CPU being so late that POWER is almost irrelevant, placing tremendous pressure on AIX. Intel Itanium vendors have been abandoning the EPIC family for a half-decade, with the final vendor closing its shop. HP-UX is bound to Intel's EPIC Itanium, which is basically dead, with HP announcing development of an unknown new UNIX OS (hopefully, a Solaris fork based Illumos distribution.) Dell is releasing their first RISC platform without an enterprise UNIX OS; hopefully they will investigate a Solaris fork Illumos distribution. SGI, who abandoned Intel's EPIC Itanium and their own UNIX, is partnering with a Solaris fork Illumos based distribution on Intel x86.

Oracle has been executing on SPARC, scoring the highest performing industry benchmarks. Fujitsu continues to execute on SPARC, holding the highest performing super-computer benchmarks. At this point, there is great opportunity for a Solaris forked Illumos distribution - if they can get their act together to support SVR4 industry standards.

The UltraSPARC family of processors could be a bridge for Illumos developers to offer Fujitsu SPARC64 support on the fastest computer in the world.

OpenIndiana may be closest to being able to offer such, not to mention get paid for older system support via resellers and new system support from Fujitsu (where Oracle shows little interest in making Solaris run today.)

ARM offers great opportunities to extend the Solaris family of architectures on the server, especially for Dell, who needs an enterprise OS. Of course, HP needs a new enterprise OS under the Intel platform.

If Illumos developers fail to understand how pivotal this point in time could be - this could be the end of an era and they would only have themselves to blame for their short-sightedness in not executing on the OpenSolaris source code tree during a very short time period where they can shine the brightest.