ZFS: Flash & Cache 2015q1

Abstract:

The concept of storage tiering has existed since computing came into existence. ZFS was one of the first mainstream file systems to consider automatic storage tiering during its initial design phase. Advances have recently been made in ZFS to make better use of cache.

Multiple kinds of Flash?

Flash comes primarily in two different types: highly reliable single-level cell (SLC) memory and multi-level cell (MLC) memory. The EE Times published a technical article describing them.

SLC... NAND flash cells... Both writing and erasing are done gradually to avoid over-stressing, which can degrade the lifetime of the cell.

MLC... packing more than one bit in a single flash storage cell... allows for a doubling or tripling of the data density with just a small increase in the cost and size of the overall silicon.

The read bandwidths between SLC and MLC are comparable

If MLC packs so much more data, why bother with SLC? There is no "free lunch": there are differences between SLC and MLC in real-world applications, as the IEEE article describes.

MLC can more than double the density [over SLC] with almost no die size penalty, and hence no manufacturing cost penalty beyond possibly yield loss.

It is important to understand the capabilities of Flash Technology to determine how to gain the best economics from the technology.

...

Access and programming times [for MLC] are two to three times slower than for the single-level [SLC] design.

...

The endurance of SLC NAND flash is 10 to 30 times more than MLC NAND flash

...

difference in operating temperature, are the main reasons why SLC NAND flash is considered industrial-grade

...

The error rate for MLC NAND flash is 10 to 100 times worse than that of SLC NAND flash and degrades more rapidly with increasing program/erase cycles

...

The floating gates can lose electrons at a very slow rate, on the order of an electron every week to every month. With the various values in multi-level cells only differentiated by 10s to 100s of electrons, however, this can lead to data retention times that are measured in months, rather than years. This is one of the reasons for the large difference between SLC and MLC data retention and endurance. Leakage is also increased by higher temperatures, which is why MLC NAND flash is generally only appropriate for commercial temperature range applications.
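The retention argument in the quote above can be made concrete with a little arithmetic. The sketch below is only an illustration: the function name `retention_weeks` is hypothetical, a constant leak rate is assumed, and the only inputs are the orders of magnitude given in the quoted text (tens to hundreds of electrons between levels, roughly one electron lost per week to per month).

```python
def retention_weeks(margin_electrons: int, weeks_per_electron: float) -> float:
    """Weeks until `margin_electrons` electrons have leaked away.

    Assumes a constant leak rate, which is a deliberate simplification.
    """
    return margin_electrons * weeks_per_electron


# Margins of 10 and 100 electrons stand in for "10s to 100s of electrons".
for margin in (10, 100):
    fastest = retention_weeks(margin, 1.0)  # one electron lost per week
    slowest = retention_weeks(margin, 4.0)  # roughly one electron per month
    print(f"margin {margin}: {fastest:.0f} to {slowest:.0f} weeks")
```

With a margin of 10 electrons the estimate is 10 to 40 weeks, which is months rather than years.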

ZFS Usage of Flash and Cache

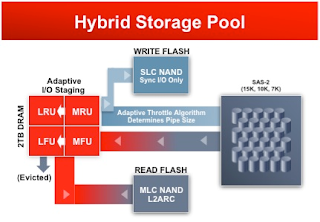

MLC flash is too compelling to leave out of a proper storage hierarchy. A doubling of storage capacity at almost no cost impact is a deal nearly too great to ignore! How does one place such a technology into a storage system?

When a missing block of data can result in loss of data on the persistent storage pool, highly reliable flash is required. The ZFS Intent Log (ZIL), normally stored on the same drives as the managed data set, was architected with an external separate log (SLOG) option for synchronous writes to leverage SLC NAND flash. The SLC flash units are normally mirrored and placed in front of all writes going to the disk units. There is a dramatic speed improvement whenever writes are committed to the flash, since committing the writes to disk takes vastly longer, and random writes coalesced on flash can then be streamed to disk. This was affectionately referred to as "LogZilla".
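The idea of acknowledging a write once it reaches a fast log, then streaming coalesced writes to disk later, can be sketched in a few lines. This is a toy model and not how ZFS is implemented: the class `LoggedStore`, its methods, and the use of an in-memory list and dict for the flash log and the disk pool are all assumptions made for illustration.

```python
class LoggedStore:
    """Toy model: acknowledge writes once logged, flush to disk later."""

    def __init__(self):
        self.log = []    # stands in for the mirrored flash log device
        self.disk = {}   # stands in for the disk pool: offset -> data

    def sync_write(self, offset: int, data: bytes) -> None:
        # The write counts as committed as soon as it is in the log.
        self.log.append((offset, data))

    def flush(self) -> list:
        # Coalesce: a later write to the same offset replaces an earlier one.
        pending = {}
        for offset, data in self.log:
            pending[offset] = data
        # Stream to disk in ascending offset order, not arrival order.
        order = sorted(pending)
        for offset in order:
            self.disk[offset] = pending[offset]
        self.log.clear()
        return order
```

Three random writes, two of them to the same offset, end up as two ordered disk writes after `flush()`.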

If the data resides on persistent storage (i.e. disks), then the loss of a block of data on flash merely results in a cache miss, so the data is never lost. With ZFS, the Level 2 Adaptive Replacement Cache (L2ARC) was architected to leverage MLC NAND Flash. There is a dramatic speed improvement whenever reads hit the MLC before going to disk. This was affectionately referred to as "ReadZilla".
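Why a lost cache block is harmless can be shown with a small sketch. It assumes a read path that checks a flash cache first and falls back to disk; the class `TieredReader` and its methods are hypothetical, and how and when ZFS actually populates the L2ARC is not modeled.

```python
class TieredReader:
    """Toy read path: flash cache in front of an authoritative disk."""

    def __init__(self, disk: dict):
        self.disk = disk        # authoritative copy of every block
        self.flash_cache = {}   # hypothetical second-level read cache
        self.hits = 0
        self.misses = 0

    def read(self, block_id):
        if block_id in self.flash_cache:
            self.hits += 1
            return self.flash_cache[block_id]
        # Cache miss: fetch from disk and keep a copy on flash.
        self.misses += 1
        data = self.disk[block_id]
        self.flash_cache[block_id] = data
        return data

    def lose_cache_block(self, block_id) -> None:
        # Simulate a flash cell going bad: only the cached copy disappears.
        self.flash_cache.pop(block_id, None)
```

After `lose_cache_block`, the next `read` simply costs one more miss and returns the same data from disk.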

Two things to be cautious about regarding flash: electrons disappear over time, and just reading data can cause corruption of data. To compensate for factors such as these, ZFS was architected with error detection and correction built into the file system.
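Detection and correction of this kind can be sketched as follows, assuming a checksum is stored separately from the data and at least one redundant copy exists. The helper names are hypothetical, and SHA-256 is used here only because it is convenient in Python.

```python
import hashlib


def checksum(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def read_with_repair(copies: list, expected: str) -> bytes:
    """Return a copy matching `expected` and overwrite any bad copies with it."""
    good = next((c for c in copies if checksum(c) == expected), None)
    if good is None:
        raise IOError("no copy matches the stored checksum")
    for i, c in enumerate(copies):
        if checksum(c) != expected:
            copies[i] = good   # repair the damaged copy from the good one
    return good
```

A read that finds one corrupted copy returns the good data and leaves both copies intact afterwards.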

Performance Boosts in ZFS from 2010 to 2015

ZFS has been running in production for a very long time. Many improvements have been made recently to advance the "State of the Art" of flash and disk!

Re-Architecture of ZFS Adaptive Replacement Cache

Consolidate Data and Metadata Lists

"the reARC project... No more separation of data and metadata and no more special protection. This improvement led to fewer lists to manage and simpler code, such as shorter lock hold times for eviction"

Deduplication of ARC Memory Blocks

"Multiple clones of the same data share the same buffers for read accesses and new copies are only created for a write access. It has not escaped our notice that this N-way pairing has immense consequences for virtualization technologies. As VMs are used, the in-memory caches that are used to manage multiple VMs no longer need to inflate, allowing the space savings to be used to cache other data. This improvement allows Oracle to boast the amazing technology demonstration of booting 16,000 VMs simultaneously."Increase Scalability through Diversifying Lock Type and Increasing Lock Quantity

"The entire MRU/MFU list insert and eviction processes have been redesigned. One of the main functions of the ARC is to keep track of accesses, such that most recently used data is moved to the head of the list and the least recently used buffers make their way towards the tail, and are eventually evicted. The new design allows for eviction to be performed using a separate set of locks from the set that is used for insertion. Thus, delivering greater scalability.Stability of ARC Size: Suppress Growths, Smaller Shrinks

...

the main hash table was modified to use more locks placed on separate cache lines improving the scalability of the ARC operations"

"The new model grows the ARC less aggressively when approaching memory pressure and instead recycles buffers earlier on. This recycling leads to a steadier ARC size and fewer disruptive shrink cycles... the amount by which we do shrink each time is reduced to make it less of a stress for each shrink cycle."Faster Sequential Resilvering of Full Large Capacity Disk Rebuilds

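The resilvering change quoted in this section replaces scattered small reads with a single ordered pass over the disk. A minimal sketch of that idea follows, assuming each block is known by a logical name and a physical offset; `plan_resilver` and `head_travel` are hypothetical helper names, and the in-memory list stands in for the on-disk log the quote mentions.

```python
def plan_resilver(blocks):
    """blocks: iterable of (logical_id, physical_offset) in discovery order.

    Returns the logical ids ordered by ascending physical offset.
    """
    log = list(blocks)                     # populating phase: record, do not read yet
    log.sort(key=lambda entry: entry[1])   # sort by physical disk offset
    return [logical_id for logical_id, _ in log]


def head_travel(offsets):
    """Total distance between consecutive offsets, a crude proxy for seek cost."""
    return sum(abs(b - a) for a, b in zip(offsets, offsets[1:]))


discovered = [("a", 900), ("b", 100), ("c", 500)]
print(plan_resilver(discovered))
print(head_travel([900, 100, 500]), "vs", head_travel([100, 500, 900]))
```

Issuing the I/O in sorted order never increases the distance travelled between consecutive offsets, which is the intuition behind the iterating phase.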
"We split the algorithm in two phases. The populating phase and the iterating phase. The populating phase is mostly unchanged... except... instead of issuing the small random IOPS, we generate a new on disk log of them. After having iterated... we now can sort these blocks by physical disk offset and issue the I/O in ascending order. "On-Disk ZFS Intent Log Optimization under Heavy Loads

"...thundering herds, a source of system inefficiency... Thanks to the ZIL train project, we now have the ability to break down convoys into smaller units and dispatch them into smaller ZIL level transactions which are then pipelined through the entire data center.ZFS Input/Output Priority Inversion

With logbias set to throughput, the new code is attempting to group ZIL transactions in sets of approximately 40 operations which is a compromise between efficient use of ZIL and reduction of the convoy effect. For other types of synchronous operations we group them into sets representing about ~32K of data to sync."

"prefetching I/Os... was handled... at a lower priority operation than... a regular read... Before reARC, the behavior was that after an I/O prefetch was issued, a subsequent read of the data that arrived while the I/O prefetch was still pending, would block waiting on the low priority I/O prefetch completion. In the end, the reARC project and subsequent I/O restructuring changes, put us on the right path regarding this particular quirkiness. Fixing the I/O priority inversion..."While all of these improvements provide for a vastly superior file system, as far as performance is concerned, there is yet another movement in the industry which really changed the way flash is used in Solaris with ZFS. As flash becomes less expensive, it's use will increase in systems. A laser was placed upon optimizing the L2ARC, making it vastly more usable.

ZFS Level 2 Adaptive Replacement Cache (L2ARC) Memory Footprint Reduction

"buffers were tracked in the L2ARC (the SSD based secondary ARC) using the same structure that was used by the main primary ARC. This represented about 170 bytes of memory per buffer. The reARC project was able to cut down this amount by more than 2X to a bare minimum that now only requires about 80 bytes of metadata per L2 buffers."

ZFS Level 2 Adaptive Replacement Cache (L2ARC) Persistence on Reboot

"the new L2ARC has an on-disk format that allows it to be reconstructed when a pool is imported... this L2ARC import is done asynchronously with respect to the pool import and is designed to not slow down pool import or concurrent workloads. Finally that initial L2ARC import mechanism was made scalable with many import threads per L2ARC device."With large storage systems, regular reboots are devastating to the performance of the cache. The process of flushing the cache and re-populating them will shorten the life span of the flash. With disk blocks already existing in L2ARC, performance improve. This also brings the benefit of inexpensive flash media as persistent storage, while competing systems must use expensive Enterprise Flash in order to facilitate persistent storage.

Conclusions:

Solaris continues to advance, using engineering and technology to provide higher performance at a lower price point than competing solutions. The changes to Solaris continue to drive down the cost of high-performance systems at a faster pace than the mere drop in price of the commodity hardware that competing systems depend upon.
